; Date: Thu Feb 06 2020

Tags: Docker, Docker Compose, AWS ECS

Deploying Docker services to AWS ECS is becoming much simpler thanks to a new collaboration between the Docker and AWS teams. It is now possible to take a normal Docker Compose file, using modern Compose features, and deploy it directly to AWS. Nothing complex or unusual is required; the complexity is handled under the covers by a generated CloudFormation file. In this article we'll take a first look at using these features, which are in early release right now.

AWS ECS is a service for deploying Containers on AWS infrastructure. That's an exciting idea, since Containers are such a flexible deployment tool, until you look into how hard it is to accomplish. For an example see my earlier tutorial, Terraform deployment of a simple multi-tier Node.js and Nginx deployment to AWS ECS, in which we were required to configure a long list of complicated objects, then create Service and Task definition files, and much more.

AWS offers a CLI tool for deployment to ECS, one that promises to simplify deploying Docker containers on ECS. This tool even accepted Compose files, but its implementation of the Compose spec was way out of date, and you were required to accompany the Compose file with another file that was inadequately documented.

What the Docker and AWS teams have developed is a way to use a modern Docker Compose file along with the new docker compose command. That's it, and we can deploy to ECS. Instead of using that second file we can use x-aws- parameters to specify AWS-specific settings.

Look at that paragraph carefully. Notice I wrote docker compose command, rather than docker-compose. Until now using Docker Compose required using the docker-compose command, but that's no more. Instead, Docker Compose functionality is now being integrated as a new docker compose command.

Another change is expanding the docker context command to integrate AWS ECS support. The Context feature lets us remotely control Docker hosts (see Using SSH to remotely control a Docker Engine or Docker Swarm in two easy steps). Generally speaking, a Context lets us run Docker commands on our laptop that affect a remote Docker installation. In this case, we can now use a Docker Context to remotely control an AWS ECS deployment.

In this article we'll go over a couple of simple examples of Docker Compose deployment to AWS ECS.

Configuration and setup

To use these features you must have a bleeding-edge version of Docker installed. I am on a Mac, so I have Docker Desktop for Mac, version 2.3.6.1, and I am on the "Edge Channel". To learn how to enable this, read the Docker config file documentation and look at the section on enabling experimental features.

In the Docker Desktop application, go to the Preferences of the Desktop UI. In the Docker Engine tab you'll have an opportunity to add a JSON configuration file. I am using this:

{
  "experimental": true,
  "debug": true
}

You then select Check for Updates, and let it update. You'll have success if this command does not give you an error:

$ docker compose

It should instead print out a usage summary of that command.

The next setup required is ensuring you have an AWS account, and the AWS CLI installed. For some advice see Setting up the AWS Command-Line-Interface (CLI) tool on your laptop. It is required that the AWS CLI be configured with at least one AWS profile, and that you have already verified success with it. The AWS CLI setup article has some recommendations about that.

While there is a manual way to set up an ECS Context, the preferred way is this:

$ docker context create ecs ecs

? Create a Docker context using: [Use arrows to move, type to filter]
> An existing AWS profile
  AWS secret and token credentials
  AWS environment variables

This will create an ECS Context named ecs, by interactively walking you through the process. The three modes it handles are:

- Select an existing AWS Profile to use

- Enter the AWS authentication tokens

- Instead tell Docker to look at the AWS_PROFILE environment variable

Docker uses the setup of the context to determine both the AWS authentication tokens, and the AWS region, to use for deploying or managing a service. For example, in the first case the region chosen is what's listed for the profile in the AWS configuration files. But when using environment variables, the region is whatever is set in AWS_REGION or AWS_DEFAULT_REGION.

It's recommended to use the third variety, which relies on environment variables. To configure it, set these environment variables:

$ export AWS_PROFILE=example-aws-profile

$ export AWS_REGION=us-west-2
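Before creating the context it can help to confirm that these settings are actually present in the current shell. The following is only a minimal sketch; `check_aws_env` is a hypothetical helper name introduced here, not part of Docker or the AWS CLI.

```shell
# Hypothetical helper: report whether the variables used by the
# "AWS environment variables" mode are present in this shell.
check_aws_env() {
    missing=0
    if [ -z "${AWS_PROFILE:-}" ]; then
        echo "AWS_PROFILE is not set" >&2
        missing=1
    fi
    # Either AWS_REGION or AWS_DEFAULT_REGION can supply the region.
    if [ -z "${AWS_REGION:-}" ] && [ -z "${AWS_DEFAULT_REGION:-}" ]; then
        echo "Neither AWS_REGION nor AWS_DEFAULT_REGION is set" >&2
        missing=1
    fi
    return "$missing"
}
```

Running `check_aws_env && docker context create ecs ecs` would then start the interactive setup only when both settings are available.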

Once you've created the context, you can run this:

$ docker context list

NAME      TYPE   DESCRIPTION                               DOCKER ENDPOINT               KUBERNETES ENDPOINT   ORCHESTRATOR
default   moby   Current DOCKER_HOST based configuration   unix:///var/run/docker.sock                         swarm
ecs *     ecs    us-west-2

The default context is your local machine, and the ecs context is your AWS account.

The Docker Context feature is very powerful, letting you have remote control over a Docker host, and letting you switch back and forth from one Docker host to another.

Quick start

The ECS-based Docker context is, by design, not fully featured. For instance we're prevented from using the docker exec command on an ECS-based container. Part of the new paradigm for Docker Context is that each context advertises its capabilities, and Docker prevents you from using features not supported by a given context. For example:

$ docker context show

ecs

$ docker run --name nginx -p 80:80 nginx

Command "run" not available in current context (ecs)

$ ls

Dockerfile

$ docker build .

Command "build" not available in current context (ecs), you can use the "default" context to run this command

In the first example we tried to launch an NGINX container using docker run on ECS, and this failed. The error message says run is not available in the ECS context. Then we try to build an image using docker build, and again we're told the build command is not available in the ECS context.

If you've used Docker Swarm you might recognize part of what's going on. With Swarm, it is recommended not to run docker build on the Swarm host, because the Swarm host is meant solely to host Docker containers. Likewise, ECS is not meant for building container images, but for hosting containers.

The rationale for refusing docker run is a little different. As we'll see in a minute, the paradigm is that Docker automatically converts a Docker Compose file into an AWS CloudFormation stack. Since docker run does not work from a Compose file, there isn't the opportunity to do that conversion.

Instead, the only way to host a Container on ECS using an ECS Context is by using a Compose file.

Let's start with an ultra simple Compose file:

version: '3.8'
services:
  nginx:
    container_name: nginx
    image: nginx
    ports:
      - '80:80'

There's nothing to this. It's the NGINX container image, in its default configuration, with port 80 (HTTP) published to the public. The default configuration helpfully has a sample website giving us a quick way to verify whether this works.

The promise is that our future using Compose on AWS ECS is going to be -- well -- it can become complex because it's AWS, but it's going to be close to being this easy.

To deploy this on your laptop: docker --context default compose up. That should work, but I get an error saying this command isn't available in the default context. Instead, docker-compose --context default up does work. Since this is still an early release, it's possible there are rough edges.

This does demonstrate that we can use the --context option to run a command against a specific Docker Context.
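An alternative to passing --context on each command is to change the selected context with docker context use, for example docker context use default. As a small sketch of the per-command form, the wrapper below prefixes any Docker command with a chosen context. The names `in_context` and `DOCKER_BIN` are hypothetical, introduced here only for illustration.

```shell
# Hypothetical wrapper: run one Docker command against a named context
# without changing the currently selected context.
# DOCKER_BIN is an illustrative override, e.g. DOCKER_BIN=echo for a dry run.
in_context() {
    ctx="$1"
    shift
    "${DOCKER_BIN:-docker}" --context "$ctx" "$@"
}
```

With this in place, `in_context default build -t todo-app .` would run the build on the local machine even while the ecs context remains selected.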

In any case, we're here to experience deploying this to ECS, so let's get on with that:

$ docker compose up

[+] Running 13/13

⠿ nginxcomposesimple CREATE_COMPLETE 202.0s

⠿ Cluster CREATE_COMPLETE 5.0s

⠿ LogGroup CREATE_COMPLETE 1.0s

⠿ NginxcomposesimpleDefaultNetwork CREATE_COMPLETE 6.0s

⠿ NginxTCP80TargetGroup CREATE_COMPLETE 1.0s

⠿ CloudMap CREATE_COMPLETE 47.0s

⠿ NginxTaskExecutionRole CREATE_COMPLETE 16.0s

⠿ NginxcomposesimpleDefaultNetworkIngress CREATE_COMPLETE 0.0s

⠿ NginxcomposesimpleLoadBalancer CREATE_COMPLETE 122.5s

⠿ NginxTaskDefinition CREATE_COMPLETE 3.0s

⠿ NginxServiceDiscoveryEntry CREATE_COMPLETE 2.0s

⠿ NginxTCP80Listener CREATE_COMPLETE 1.0s

⠿ NginxService CREATE_COMPLETE 62.0s

It might not be so obvious, but what's happened is the ECS driver for Docker Compose has converted the Compose file into a CloudFormation stack. It built for us an ECS Cluster, AWS Log Group, a Load Balancer with a Target Group for port 80, a TaskDefinition matching the Compose file, and more. If you're familiar with AWS services, this will look familiar.

We were not shown the domain name of the resulting service. But we can run this:

$ docker ps

Command "ps" not available in current context (ecs)

$ docker compose ps

ID NAME REPLICAS PORTS

nginxcomposesimple-NginxService-1B2ZOW1QAYBK1 nginx 1/1 NginxcomposesimpleLoadBalancer-739819343.us-west-2.elb.amazonaws.com:80->80/http

We learn that ps is not available from the ECS context, but we can run docker compose ps.

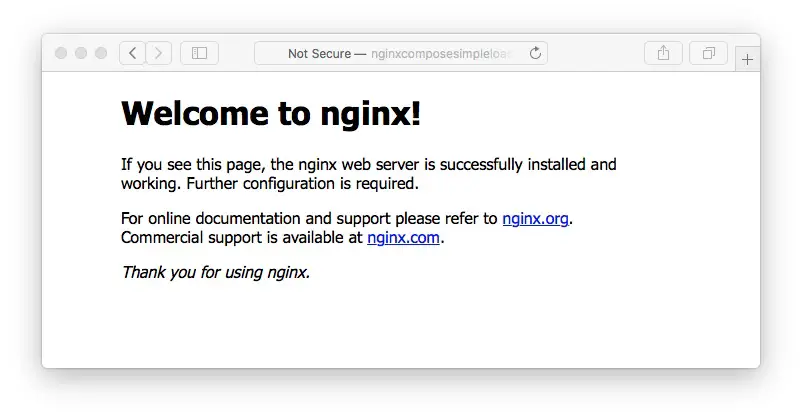

In the PORTS column, we're shown the domain name of the load balancer. Copy and paste that into a browser and you'll see this:

And, we have EASILY hosted a container on AWS ECS.
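Rather than copying the host name by hand, the PORTS value can be turned into a URL with ordinary shell parameter expansion. This is a sketch that assumes the `host:port->port/protocol` layout shown in the output above; `ports_to_url` is a hypothetical helper name.

```shell
# Hypothetical helper: convert a PORTS entry such as
#   example.elb.amazonaws.com:80->80/http
# into a URL that can be opened in a browser or passed to curl.
ports_to_url() {
    entry="$1"
    published="${entry%%->*}"    # drop "->80/http", keep "host:80"
    protocol="${entry##*/}"      # keep the text after the last "/"
    printf '%s://%s\n' "$protocol" "$published"
}
```

The result could then be handed to a tool such as curl to check that the sample NGINX page responds.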

To inspect the CloudFormation stack, run docker compose convert | less. That command shows the CloudFormation template that Docker generates from your Compose file. If you prefer to work with the CloudFormation stack yourself, feel free to modify the generated file.

As cool as this is, there are some limitations. Compare the long list of commands available on the docker-compose command, with this:

$ docker compose --help
Docker Compose

Usage:
  docker compose [command]

Available Commands:
  convert     Converts the compose file to a cloud format (default: cloudformation)
  down
  logs
  ps
  up

That's a great starting point, but there's a bunch of features we need. For example we cannot launch a shell environment in the deployed container:

$ docker exec -it nginx bash

Command "exec" not available in current context (ecs)

The docker-compose command has an exec command, but it is not implemented in docker compose. Many other commands are missing as well, so we have to remember this is still an early release available only on the bleeding edge channel.

Once we're done basking in the glory of a simple Docker Compose deployment to ECS, let's see how the docker compose down command works:

$ docker compose down

[+] Running 13/13

⠿ nginxcomposesimple DELETE_COMPLETE 446.0s

⠿ Cluster DELETE_COMPLETE 398.0s

⠿ LogGroup DELETE_COMPLETE 400.0s

⠿ NginxcomposesimpleDefaultNetwork DELETE_COMPLETE 414.0s

⠿ NginxTCP80TargetGroup DELETE_COMPLETE 398.0s

⠿ CloudMap DELETE_COMPLETE 445.0s

⠿ NginxTaskExecutionRole DELETE_COMPLETE 401.0s

⠿ NginxcomposesimpleDefaultNetworkIngress DELETE_COMPLETE 2.0s

⠿ NginxcomposesimpleLoadBalancer DELETE_COMPLETE 398.0s

⠿ NginxTaskDefinition DELETE_COMPLETE 398.0s

⠿ NginxServiceDiscoveryEntry DELETE_COMPLETE 398.0s

⠿ NginxTCP80Listener DELETE_COMPLETE 397.0s

⠿ NginxService DELETE_COMPLETE 396.0s

That takes apart the CloudFormation stack, and if you revisit the URL you got earlier the service no longer responds.

In this section we've touched on how to deploy a trivial Docker Compose stack to AWS ECS. Previously this was a difficult task to learn. For example, earlier this year I did a deep dive into this topic, resulting in an earlier tutorial on Docker deployment to ECS, another tutorial on using Terraform for Docker deployment to ECS, and ultimately a chapter on Docker deployment with Terraform in the Fifth Edition of Node.js Web Development. It took 3-4 weeks to learn and master everything well enough to write those things. AWS has a lot of complexity and often the products are so complex you get the impression that they don't care about the pain we have to endure to use AWS. But this level of simplicity demonstrates that AWS is perhaps changing its tune.

Since this was such a trivial example, we need a more concrete one to work with.

Node.js app

To do something a little more complex, let's deploy a simple Node.js application that requires a database. The app is one I created a few days ago, described in Single page multi-user application with Express, Bootstrap v5, Socket.IO, Sequelize, and it is a fairly simple Express-based web application. The source code for the application is on GitHub at https://github.com/robogeek/nodejs-express-todo-demo. For this demo consult the first-release-dockerize branch.

We'll not discuss the full application, so if you're interested please read the four-part series that goes over how it works. In this posting we'll discuss the differences required to support deployment to AWS ECS using Docker Compose.

The first thing is of course creating a Dockerfile to describe the container image for the application.

FROM node:14.8

# Replicate the configuration present in package.json
ENV PORT="80"
ENV SEQUELIZE_CONNECT="models/sequelize-sqlite.yaml"

RUN apt-get update -y \
    && apt-get -y install curl python build-essential git \
    apt-utils ca-certificates sqlite3

RUN mkdir /app /app/models /app/public /app/routes /app/views
COPY models/ /app/models/
COPY public/ /app/public/
COPY routes/ /app/routes/
COPY views/ /app/views/
COPY *.mjs package.json /app/

WORKDIR /app
RUN npm install --unsafe-perm

CMD [ "node", "./app.mjs" ]

Because the application uses a feature delivered in Node.js 14.8, the FROM line explicitly references that version. Otherwise this is a fairly normal Docker container for Node.js applications.

In it, we set up a directory /app to contain the application code. In that directory we run npm install to install required packages. The last line runs the application server.

This application is written to use a simple YAML file to describe the database connection. We're using this config file:

dbname: todo
username:
password:
params:
    dialect: sqlite
    storage: todo.sqlite3

This says to use the SQLite3 SQL database engine, and to store the database in a file named todo.sqlite3. This is not a best practice since the database is stored inside the running container and will vaporize the moment we delete the container. The good news is that configuring it to use a different database is a matter of changing the configuration file. We'll take care of this a little bit later.

The next task is to create the docker-compose.yml file:

version: '3.8'
services:
  todo:
    image: robogeek/todo-app
    container_name: todo-app
    ports:
      - "80:80"

This is similar to our NGINX example earlier. The difference is that we're using our own image, robogeek/todo-app, published on Docker Hub, and we haven't yet shown how the application gets to Docker Hub.

In the package.json we added these command scripts:

"docker-build": "docker --context default build -t todo-app .",

"docker-run": "docker --context default run --name todo-app -p 4000:80 todo-app",

"docker-tag": "docker --context default tag todo-app robogeek/todo-app:latest",

"docker-push": "docker --context default push robogeek/todo-app"

The docker-build script handles building the image. Note that we're using --context default on this and every other command. These commands must be run on the default context, and are not supported in the ECS context.

The docker-run command allows us to test the container on our laptop before deploying it to Docker Hub.

The docker-tag and docker-push scripts are required for pushing the image to Docker Hub.
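The build, tag and push scripts are meant to run in that order, and a later step makes little sense if an earlier one failed. A minimal sketch of chaining them, stopping at the first failure, could look like this. The names `publish_image` and `NPM_BIN` are hypothetical and not part of the project.

```shell
# Hypothetical helper: run the package.json scripts that publish the image,
# stopping at the first step that fails.
# NPM_BIN is an illustrative override, e.g. NPM_BIN=echo for a dry run.
publish_image() {
    for step in docker-build docker-tag docker-push; do
        "${NPM_BIN:-npm}" run "$step" || return 1
    done
}
```

The docker-run script is deliberately left out of the chain, since it starts the container in the foreground for manual testing.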

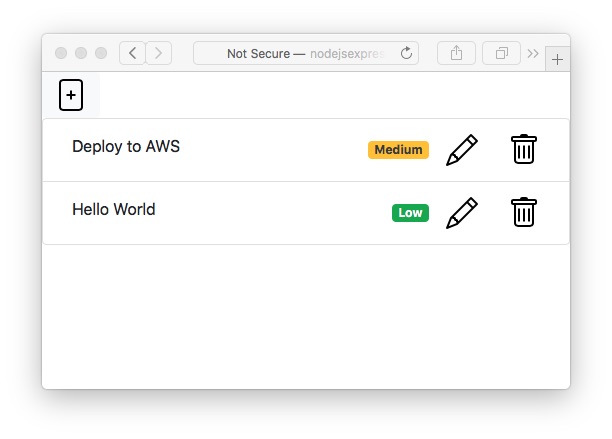

Once we've run those commands, we can deploy the Compose file. To do so we run docker compose up exactly as before, and the output looks very similar. Once it is deployed, run docker compose ps to find the URL for the load balancer. Once you do, you'll see this:

In this application, the "+" icon at the top lets you add a TODO item. The Pencil icon lets you modify one, and the Trash Can icon lets you delete one.

But, as we said, there is no way to save these TODO items because as it stands the database is within the container. As soon as we delete or update the container the database disappears.

A related problem is that Docker's ECS integration does not currently support mounting volumes. One solution would be to mount an AWS EFS volume into the container, and store the database on that volume. But that's not possible currently. The Docker and AWS teams have promised volume support soon.

Fortunately there is another way to implement data persistence. Among the AWS services is the Relational Database Service (RDS), which is a hosted SQL database. Let's discuss how to configure an RDS instance to use with the Todo application.

The application is written so we can reconfigure the database connection as needed. For example bundled in the container is another configuration file for a MySQL server:

dbname: todo
username: todo
password: todo12345
params:
    host: localhost
    port: 3306
    dialect: mysql

It would be easy to add a MySQL container to the Docker Compose file. But that would run into the same problem, since the MySQL database would also vaporize as soon as its container is deleted.

To use an AWS RDS database instead, add a few environment variables that override the configuration file of the Todo application. With the hosted RDS database we get a host name, user name, and password for the database. Clearly we could construct a new configuration file with those credentials. But we also wrote the application so that every field of this file can be overridden using environment variables. Therefore, the Docker Compose file could have an environment section containing these settings:

version: '3.8'
services:
  todo:
    ...
    environment:
      SEQUELIZE_CONNECT: "models/sequelize-mysql-docker.yaml"
      SEQUELIZE_DBUSER: "... USER NAME ..."
      SEQUELIZE_DBPASSWD: "... PASSWORD ..."
      SEQUELIZE_DBHOST: "... DB HOST NAME ..."

Once that's done, the TODO data will be stored in a persistent database.
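The override rule itself is simple: a value from the environment wins over the value in the configuration file. The real lookup happens inside the Node.js application, so the shell function below is only an illustration of that precedence. The name `setting` and the host `db.example.com` are hypothetical, and treating an empty variable as "not set" is an assumption.

```shell
# Illustration only: the real lookup happens inside the Node.js application.
# A non-empty value from the environment wins over the value read from
# the bundled YAML configuration file.
setting() {
    env_value="$1"     # value taken from the environment (may be empty)
    file_value="$2"    # value read from the configuration file
    printf '%s\n' "${env_value:-$file_value}"
}

# Example: with SEQUELIZE_DBHOST unset, the file's "localhost" is used.
#   setting "${SEQUELIZE_DBHOST:-}" "localhost"
```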

Summary

This new ECS context for Docker is a very cool new feature. The Docker and AWS teams should be applauded for having the vision to decrease the complexity of using AWS.