; Date: Sat Mar 31 2018

Tags: Docker, Docker MAMP

Modern development environments require a continuous integration system, along with a reasonable Git-based repository hosting service. It's possible to rent these services: GitHub and GitLab are both excellent hosted Git repository services, for example, and there are several hosted continuous integration systems. GitLab in particular is a one-stop shop offering both Git hosting and continuous integration in one service. But you can easily host Git and continuous integration services on your own hardware, and with a little work the services can be HTTPS-protected using Let's Encrypt.

The generalized goal is to solve these needs:

- Github-like service on your own hardware

- Supporting personal or team-wide source repositories and project management

- Continuous Integration on your own hardware

- Possibly several other services - like a Wiki for team notes

- Possibly shared out to the Internet for remote access

In my case, this is for my own needs. I have several repositories for websites I've built using AkashaCMS, plus some repositories for clients. I need both Git hosting and a continuous integration system. For example, when I'm finished writing this blog post I'll push the techsparx.com repository to the Git server (Gogs). The continuous integration system (Jenkins) then notices the repository has changed and automatically kicks off a job to build the site and deploy it to the server. My involvement is writing the content and pushing it to the repository; an automated system takes care of the rest.

The whole system runs on an Intel NUC on my desk. For an overview of the NUC, see: Intel NUC's perfectly supplant Apple's Mac Mini as a lightweight desktop computer

Architecture

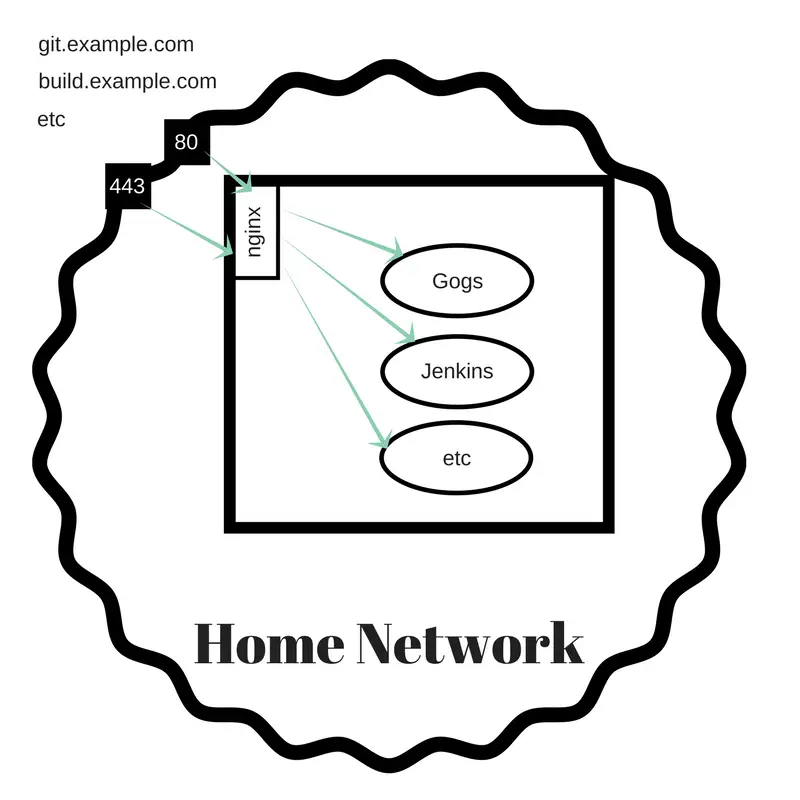

I recently bought a new NUC and want to move the services to it. I also had the idea to expose the services publicly, using a custom domain for each service. My home Internet service is Sonic DSL, and one of its features is a fixed IP address, which makes it easy to host a service at home. What I'm doing is configuring subdomains of a domain so that I'll have:

- git.example.com - Git repository service

- build.example.com - Build service

- cloud.example.com - Owncloud/Nextcloud

- plex.example.com - Plex

The DSL router can route ports to internal servers - so ports 80 (HTTP) and 443 (HTTPS) can be routed to the new NUC. The NUC must then arrange so that each subdomain is handled by the corresponding service.

The method for this is to use HTTPS proxy servers in nginx, and to use Let's Encrypt to get the SSL certificates.

All these services will be installed on the same NUC. But because the setup uses proxy server declarations, it could grow to several NUCs if desired.

Software choice

In case it's not clear - I chose Gogs for the Git repository service, and Jenkins for the continuous integration service.

There are several GitHub alternatives, see: These github alternatives give you powerful git hosting, on your own terms

Why Gogs? It's a lightweight piece of software (it can even run on a Raspberry Pi) that is easy to set up and administer. I spent half a day trying to get GitLab to work. The official GitLab image was obtuse enough that I couldn't get it to do anything, and while the leading third-party GitLab image was easy to get running, it was not possible to connect a GitLab Runner to it (the Runner failed to retrieve the repository due to a bad repository URL). I wanted to use GitLab because it is so much more comprehensive and feature-filled, but I couldn't get it to work, and I didn't have much time for the project. Gogs, on the other hand, is something I've run here at home for a couple of years: I know this software, I had already set it up once on Docker, and I already had a directory of Gogs config data and repositories, so it was a no-brainer.

Why Jenkins? There are plenty of build systems out there. I wanted to use GitLab, but that failed. I learned of Drone, which bills itself as a Docker-centric continuous integration system. It looks cool and right on target for what I needed, but it wasn't easy to figure out how to get it going. Again, I already had a Jenkins setup and it was a known quantity, making it an easy choice. If I had a more complex environment, I would have spent the time learning to use and set up Drone.

Gogs setup

I started this project by standing up the services without worrying about the nginx front end.

Make a directory named buildnet, which is meant to contain all the services for Git repository hosting and builds. We'll start by defining Gogs in this docker-compose.yml file:

```yaml
version: '3.5'

services:
  gogs:
    image: gogs/gogs
    container_name: gogs
    restart: always
    networks:
      - buildnet
    ports:
      - '10022:10022'
      - '3080:3080'
    volumes:
      - './gogs-data:/data'
      - './gogs-app.ini:/data/gogs/conf/app.ini'

networks:
  buildnet:
    name: buildnet
    driver: bridge
```

The Docker network, buildnet, is created by this Compose file. We specify a name parameter (a feature requiring Compose file format 3.5) because otherwise the generated network name is prefixed with the project name, giving something like buildnet_buildnet. The Compose file that launches nginx needs to refer to this network, so the network name must be predictable, and therefore we specify it here.

It's fairly easy to get Gogs running. It does require a configuration file (see the documentation), and there are a few interesting settings to make:

```ini
[server]
DOMAIN = git.example.com
HTTP_PORT = 3080
ROOT_URL = http://git.example.com/
DISABLE_SSH = false
START_SSH_SERVER = true
SSH_DOMAIN = git.example.com
SSH_PORT = 10022
OFFLINE_MODE = false
```

This sets Gogs to look for the given domain name. Internally it will appear on port 3080, so we'll have to configure nginx to proxy to that port. Gogs includes a bundled SSH service, and we expose it at port 10022 so it doesn't interfere with the standard SSH service on port 22.

While the service is internally at port 3080 we want a simple URL shown to the public. The ROOT_URL variable contains the URL shown to the public. In the diagram earlier, it is nginx which will make Gogs visible on the URL defined in ROOT_URL.

Running Gogs is then a matter of this:

```
$ docker-compose build
$ docker-compose up
```

This runs the Gogs service in the foreground, which is useful for debugging while getting everything correct. Once you're satisfied, the Gogs service can be run in the background by adding the -d option.

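Updating the Gogs service is a matter of the following. Note that docker-compose pull takes the service name (gogs) as its argument, not the image name:

```
$ docker-compose stop
$ docker-compose pull gogs
$ docker-compose build
$ docker-compose up -d
```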
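The update steps can be wrapped in a small script so they are run the same way every time. This is a hypothetical sketch, not part of the original setup: the `update_service` and `run` function names and the `DRY_RUN` switch are made up, and it assumes it is run from the buildnet directory holding docker-compose.yml. It leaves out `docker-compose build` because the gogs service uses a prebuilt image and has no `build:` section.

```sh
#!/bin/sh
# update_service SERVICE
# Hypothetical helper wrapping the Compose update steps for one service.
# Run it from the directory holding docker-compose.yml.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

update_service() {
    service="$1"
    # Stop the running container for this service
    run docker-compose stop "$service" || return 1
    # Fetch the newest image (pull takes the service name, not the image name)
    run docker-compose pull "$service" || return 1
    # Recreate the container from the new image, in the background
    run docker-compose up -d "$service"
}
```

For example, `DRY_RUN=1 update_service gogs` prints the three commands so you can check them, and `update_service gogs` runs them.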

Jenkins continuous integration service

I wanted to have everything managed by Docker. Unfortunately the Jenkins Docker container is not so useful. First, it was difficult getting the initial configuration to happen. Then, once I managed to get it running, the system configuration area showed several errors. Finally, it was impossible to add build jobs, because the screen was simply blank. Seeing the errors in the system configuration, I could only think "oooh, there's something seriously wrong here".

On my other NUC, I used the traditional Ubuntu installation instructions at https://jenkins.io/doc/book/installing/#debian-ubuntu

This is the typical sequence of apt-get update followed by apt-get install jenkins, after which you do some configuration. In /etc/default/jenkins I set HTTP_PORT=49001 so that Jenkins is visible on port 49001.
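If you script the installation instead of editing /etc/default/jenkins by hand, a helper that sets the value idempotently avoids ending up with two HTTP_PORT lines. This is a hypothetical sketch: `set_http_port` is a made-up name, and it assumes the /etc/default/jenkins layout of the Debian/Ubuntu package of that era, where the port is a plain `HTTP_PORT=` line (newer Jenkins packages may take their port from systemd settings instead).

```sh
#!/bin/sh
# set_http_port FILE PORT
# Hypothetical helper: sets HTTP_PORT in a /etc/default/jenkins-style file,
# rewriting an existing HTTP_PORT= line or appending one if none exists.
set_http_port() {
    file="$1"
    port="$2"
    # Reject anything that is not a plain number
    case "$port" in
        ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    if grep -q '^HTTP_PORT=' "$file"; then
        # Rewrite via a temp file, then copy back so the original
        # file keeps its ownership and permissions
        tmp="$file.tmp.$$"
        sed "s/^HTTP_PORT=.*/HTTP_PORT=$port/" "$file" > "$tmp" &&
            cat "$tmp" > "$file" && rm -f "$tmp"
    else
        echo "HTTP_PORT=$port" >> "$file"
    fi
}
```

As root, `set_http_port /etc/default/jenkins 49001` followed by a restart of the Jenkins service would apply the setting described above.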

Then you manage the process using systemctl, like so:

```
# systemctl restart jenkins.service
```

Kicking the tires

At this point you have a Git repository service, and a continuous integration system.

You should be able to load a few git repositories into Gogs pretty easily.

At the moment, the Git URLs are not the plain, simple URLs implied by the configuration file snippet shown earlier. Instead the Gogs server is at port 3080 on the computer you're using, and the best Git URL to use is ssh://git@nuc2.local:10022/david/techsparx.com.git, where nuc2.local is the hostname of the NUC I'm using. Over HTTP it would be http://nuc2.local:3080/david/techsparx.com.git, accounting for the local hostname and the port number on the local network.
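Both URL forms follow a fixed pattern built from the host, the port, the repository owner and the repository name. The function below is a hypothetical helper that prints both, defaulting to the ports configured earlier (10022 for SSH, 3080 for HTTP).

```sh
#!/bin/sh
# gogs_clone_urls HOST OWNER REPO [SSH_PORT] [HTTP_PORT]
# Hypothetical helper: prints the SSH and HTTP clone URLs for a Gogs
# repository reached directly on the local network (no nginx in front).
gogs_clone_urls() {
    host="$1"
    owner="$2"
    repo="$3"
    ssh_port="${4:-10022}"   # SSH_PORT from app.ini
    http_port="${5:-3080}"   # HTTP_PORT from app.ini
    echo "ssh://git@$host:$ssh_port/$owner/$repo.git"
    echo "http://$host:$http_port/$owner/$repo.git"
}
```

With the values from this example, `gogs_clone_urls nuc2.local david techsparx.com` prints exactly the two URLs given above.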

Inside a Jenkins job, you'll configure that local repository URL -- in the Source Code Management section, click the Git choice, then enter the URL.

You'll need to set up an SSH key for the ssh:// URL to work. Jenkins executes the corresponding commands as the jenkins user ID, so you must do this:

```
$ sudo su -
# su - jenkins
$ ssh-keygen
... much output
$ pwd
/var/lib/jenkins
$ ls -al .ssh
total 28
drwx------  2 jenkins jenkins 4096 Mar 30 17:06 .
drwxr-xr-x 15 jenkins jenkins 4096 Mar 30 18:02 ..
-rw-------  1 jenkins jenkins 1675 Mar 30 17:04 id_rsa
-rw-r--r--  1 jenkins jenkins  394 Mar 30 17:04 id_rsa.pub
-rw-r--r--  1 jenkins jenkins 8398 Mar 31 12:33 known_hosts
```

This starts a shell equivalent to if you'd logged in as jenkins, and therefore the commands you run have $HOME as /var/lib/jenkins and all the other attributes of being logged in as jenkins.
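OpenSSH ignores a private key that other users can read, so if the jenkins user's Git or rsync connections fail, the modes in the listing above are worth checking. This hypothetical helper (not something Jenkins provides) checks that the `.ssh` directory is mode 700 and that `id_rsa` is mode 600 or 400, using `find -perm` for an exact-mode match.

```sh
#!/bin/sh
# check_ssh_dir DIR
# Hypothetical helper: succeeds when DIR is mode 700 and DIR/id_rsa
# exists with mode 600 or 400; prints the problem to stderr otherwise.
check_ssh_dir() {
    dir="$1"
    key="$dir/id_rsa"
    # find prints the path only when the mode matches exactly;
    # -prune stops it from descending into the directory
    if [ -z "$(find "$dir" -prune -perm 700)" ]; then
        echo "$dir should be mode 700" >&2
        return 1
    fi
    if [ ! -f "$key" ]; then
        echo "$key not found; run ssh-keygen first" >&2
        return 1
    fi
    if [ -z "$(find "$key" \( -perm 600 -o -perm 400 \))" ]; then
        echo "$key must not be readable by group or others" >&2
        return 1
    fi
}
```

While logged in as jenkins, `check_ssh_dir /var/lib/jenkins/.ssh` returns success for the listing shown above.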

The result is a public SSH key (id_rsa.pub) to add to the Gogs server. In Jenkins, go to the Credentials section to set up this SSH key.

The last thing to do while you're logged in as jenkins is to SSH into any servers that Jenkins must access. During deployment you might use rsync to push files to a server, which requires passwordless SSH credentials. That means copying the id_rsa.pub file into the authorized_keys file on each such server, and then doing this:

```
$ ssh -l USERNAME SERVER.DOMAIN.COM
The authenticity of host 'SERVER.DOMAIN.COM (###.###.###.###)' can't be established.
ECDSA key fingerprint is SHA256:rWqwh967MZCQNhaBNBJ6bFWInpLjUkk1l+LW2VZD1+E.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'SERVER.DOMAIN.COM,###.###.###.###' (ECDSA) to the list of known hosts.
$
```

That is, logging in like this adds the server to the known_hosts file, so that when a Jenkins job does the same thing it succeeds without any user interaction.

Configuring HTTP-only nginx

Let's start setting up nginx, starting with a simple HTTP proxy. We currently have two services to expose to the world, Gogs and Jenkins. But we know down the road there will be other services to expose. The nginx setup will focus on proxy server objects - Gogs will be one proxy server, Jenkins another, and so on.

Make a directory - nginx - that will contain the setup, with this docker-compose.yml:

```yaml
version: '3'

services:
  nginx:
    # build: ./nginx-lets-encrypt
    image: nginx:stable
    container_name: nginx
    networks:
      - buildnet
    volumes:
      - ./nginx-lets-encrypt/nginx.conf:/etc/nginx/nginx.conf
      # - ./lets-encrypt-data/:/etc/letsencrypt/
      # - /home/docker/jenkins/workspace/techsparx.com/out/:/webroots/techsparx.com:ro
    ports:
      - '80:80'
      - '443:443'

networks:
  buildnet:
    external: true
```

When we get to using Let's Encrypt, we'll need further configuration. We're exposing ports 80 (HTTP) and 443 (HTTPS) to the world, and we're attaching the container to buildnet. Because of external: true, Compose does not create a new buildnet network but uses the buildnet defined in the previous Compose file.

Create a directory nginx/nginx-lets-encrypt and a file nginx.conf containing this:

```nginx
user nginx nginx;
worker_processes 4;
error_log /var/log/nginx-error.log debug;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx-access.log main;
    sendfile on;
    #tcp_nopush on;
    keepalive_timeout 65;

    server {
        listen 80;
        server_name git.example.com www.git.example.com;
        access_log /var/log/git.example.access.log main;
        error_log /var/log/git.example.error.log debug;

        # location /.well-known/ {
        #     root /webroots/git.example.com/;
        # }

        location / {
            proxy_pass http://gogs:3080/;
        }
    }

    server {
        listen 80;
        server_name build.example.com www.build.example.com;
        access_log /var/log/build.example.access.log main;
        error_log /var/log/build.example.error.log debug;

        # location /.well-known/ {
        #     root /webroots/build.example.com/;
        # }

        location / {
            proxy_pass http://nuc2.local:49001/;
        }
    }
}
```

This configuration is adapted from here: https://www.nginx.com/resources/wiki/start/topics/examples/full/

It sets up two proxy servers, for the git and build subdomains. Each listens on port 80 only, and proxies HTTP to the corresponding service set up earlier. There's a placeholder to handle the .well-known directory that will be used when we get to Let's Encrypt.

Because the nginx container is connected to buildnet, it can reach the gogs container directly using that hostname. But Jenkins is not on buildnet, and therefore must be reached using the domain name of the computer.
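Every service gets the same shape of server block, differing only in the domain, the log file names and the proxy_pass target, so the blocks can be generated rather than copied by hand. This is a hypothetical sketch, not part of the setup described here: the function name is made up, and unlike the hand-written blocks above it names the log files after the full domain (git.example.com.access.log rather than git.example.access.log).

```sh
#!/bin/sh
# http_proxy_block DOMAIN UPSTREAM
# Hypothetical generator: prints an HTTP-only nginx server block that
# proxies DOMAIN (and www.DOMAIN) to UPSTREAM, e.g. gogs:3080.
# The heredoc is unquoted, so only the shell variables below are expanded.
http_proxy_block() {
    domain="$1"
    upstream="$2"
    cat <<EOF
server {
    listen 80;
    server_name $domain www.$domain;
    access_log /var/log/$domain.access.log main;
    error_log /var/log/$domain.error.log debug;

    # location /.well-known/ {
    #     root /webroots/$domain/;
    # }

    location / {
        proxy_pass http://$upstream/;
    }
}
EOF
}
```

For this setup, `http_proxy_block git.example.com gogs:3080` and `http_proxy_block build.example.com nuc2.local:49001` would print the two blocks to paste inside the http { } section.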

After running docker-compose up you'll see whether the configuration file is correct, but you cannot (yet) visit using the domain names.

The next step is configuring domain names to point at this server. I'm using subdomains of a domain for one of my websites. Each subdomain was configured with an A record for my home DSL connection -- which might look like this:

```
git.example.com.   14400 IN A 123.123.123.123
build.example.com. 14400 IN A 123.123.123.123
```

Then in the DSL router, I set up port forwarding so that ports 80 and 443 on the public side of the router are forwarded to ports 80 and 443 on the server. While there, it is useful to set up port forwarding for port 22 (regular SSH) and port 10022 (Gogs SSH) so that these ports can be accessed externally.

With all that set up, you can now visit http://git.example.com or http://build.example.com to verify the nginx server is set up correctly.

You should be able to log in to both services, verify that Gogs is exposing the correct URLs and that you can git clone with those repository URLs, and check that Jenkins is still able to run build jobs.

Adding certbot to nginx Dockerfile

Now we need to set up certbot so we can get SSL certificates from Let's Encrypt. We'll adapt a technique shown elsewhere: Deploying an Express app with HTTPS support in Docker using Lets Encrypt SSL

First, modify nginx/docker-compose.yml to this:

```yaml
version: '3'

services:
  nginx:
    build: ./nginx-lets-encrypt
    container_name: nginx
    networks:
      - buildnet
    volumes:
      - ./nginx-lets-encrypt/nginx.conf:/etc/nginx/nginx.conf
      - ./lets-encrypt-data/:/etc/letsencrypt/
      # - /home/docker/jenkins/workspace/techsparx.com/out/:/webroots/techsparx.com:ro
    ports:
      - '80:80'
      - '443:443'

networks:
  buildnet:
    external: true
```

The main change is that we'll need to build a customized nginx-based container that incorporates the certbot tool along with a cron daemon.

In nginx/nginx-lets-encrypt create a Dockerfile containing:

```dockerfile
FROM nginx:stable

# Inspiration:
# https://hub.docker.com/r/gaafar/cron/

# Install cron, bash and wget (certbot-auto itself is downloaded below)
RUN apt-get update \
    && apt-get install -y cron bash wget

RUN mkdir -p /webroots/ /scripts

# /webroots/DOMAIN.TLD/.well-known/... files go here
VOLUME /webroots
VOLUME /etc/letsencrypt

# /webroots/DOMAIN.TLD will be mounted
# into each proxy as http://DOMAIN.TLD/.well-known
#
# /scripts will contain certbot and other scripts
COPY register /scripts/
RUN chmod +x /scripts/register

WORKDIR /scripts
RUN wget https://dl.eff.org/certbot-auto
RUN chmod a+x ./certbot-auto

# Run certbot-auto so that it installs itself
RUN /scripts/certbot-auto -n certificates

# This installs a Crontab entry which
# runs "certbot renew" on several days a week at 03:22 AM
#
RUN echo "22 03 * * 2,4,6,7 root /scripts/certbot-auto renew" >/etc/cron.d/certbot

# Run both nginx and cron together
CMD [ "sh", "-c", "nginx && cron -f" ]
```

The idea is to support two functionalities:

- Registering domains with the Let's Encrypt service

- Automatically renew the SSL certificates for each domain

We've installed cron so that an occasional script is run to manage renewal of Lets Encrypt SSL certificates. The cron job is defined towards the bottom -- this methodology is compatible with the Debian setup for cron, other Linux distros may set up cron differently.
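In the cron.d format the fields are minute, hour, day of month, month and day of week, followed by the user, so `22 03 * * 2,4,6,7` means 03:22 on Tuesday, Thursday, Saturday and Sunday (Debian's cron accepts 7 as Sunday). One gap remains: `certbot-auto renew` writes new certificate files, but the running nginx keeps serving the old ones until it is reloaded. Certbot's `--deploy-hook` option runs a command once for each certificate that was actually renewed, and not at all when nothing was due. The helper below is a hypothetical sketch that prints such a variant of the cron line; it assumes nginx lives at /usr/sbin/nginx, as in the Debian-based nginx image (cron runs jobs with a minimal PATH), and a certbot version new enough to support `--deploy-hook`.

```sh
#!/bin/sh
# certbot_cron_line [SCHEDULE]
# Hypothetical helper: prints an /etc/cron.d entry that renews certificates
# and reloads nginx only when at least one certificate was renewed.
certbot_cron_line() {
    # Default: the same schedule as the Dockerfile's cron entry
    schedule="${1:-22 03 * * 2,4,6,7}"
    # cron.d format: minute hour day-of-month month day-of-week USER COMMAND
    printf '%s root /scripts/certbot-auto renew --deploy-hook "/usr/sbin/nginx -s reload"\n' "$schedule"
}
```

The printed line is what would go into /etc/cron.d/certbot in place of the plain renew entry.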

The CMD line was arrived at after much experimentation. We need to start both services while keeping one of them in the foreground, so that Docker knows to keep the container alive. By default nginx puts itself into the background, while cron -f stays in the foreground. We could have reversed these two: cron with no options automatically puts itself into the background, and nginx -g 'daemon off;' keeps nginx in the foreground, but I had a difficult time getting the syntax for that correct (the directive must be quoted and needs its trailing semicolon).

In the middle we install the certbot tool. In the documentation there are several ways described to install certbot, and they're said to be interchangeable. The certbot-auto -n certificates command is run simply for the side-effect of it installing the dependencies required to run certbot-auto.

To register a domain with Let's Encrypt we need a method to validate domain ownership. Certbot does this by installing a magic file so that it can be retrieved under http://DOMAIN.TLD/.well-known/acme-challenge/. To support this we'll create a /webroots directory that will be mounted into the nginx service for each proxied domain.

The /etc/letsencrypt directory is where certbot keeps Let's Encrypt data files. We need it as an external volume so that its contents survive even if the container is destroyed and recreated.

To simplify registering with Let's Encrypt, create a script named register containing:

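```sh
#!/bin/sh
# Usage: register DOMAIN
set -e
if [ -z "$1" ]; then
    echo "Usage: $0 DOMAIN" >&2
    exit 1
fi
mkdir -p "/webroots/$1/.well-known/acme-challenge"
/scripts/certbot-auto certonly --webroot -w "/webroots/$1" -d "$1"
```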

This takes a domain name on the command-line, creates the .well-known directory, then runs certbot-auto to register the domain.

In nginx.conf we can now make this change:

```nginx
server {
    listen 80;
    server_name git.example.com www.git.example.com;
    access_log /var/log/git.example.access.log main;
    error_log /var/log/git.example.error.log debug;

    location /.well-known/ {
        root /webroots/git.example.com/;
    }

    location / {
        proxy_pass http://gogs:3080/;
    }
}
```

That is, the nginx container has the /webroots directory which will be holding the .well-known files. This makes those files publicly visible.
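With the root directive, nginx builds the file path by appending the full request URI to the root directory, which is why register creates .well-known/acme-challenge underneath /webroots/DOMAIN. The hypothetical function below only performs that mapping, so you can see where a given challenge URL is looked up; the token in the usage example is made up.

```sh
#!/bin/sh
# challenge_file ROOT URI
# Hypothetical helper mirroring how nginx's "root" directive maps a
# request: the file path is ROOT followed by the full request URI.
challenge_file() {
    # Drop a trailing slash so the printed path has no "//"
    # (the filesystem treats both forms the same)
    root="${1%/}"
    uri="$2"
    case "$uri" in
        /.well-known/acme-challenge/*) echo "$root$uri" ;;
        *) echo "not an ACME challenge URI: $uri" >&2; return 1 ;;
    esac
}
```

For example, `challenge_file /webroots/git.example.com/ /.well-known/acme-challenge/abc123` prints /webroots/git.example.com/.well-known/acme-challenge/abc123, a path inside the directory the register script creates.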

Recreate the container like so:

```
$ docker-compose stop
$ docker-compose build
$ docker-compose up
```

We should now be able to run /scripts/register:

```
$ docker exec -it nginx bash
root@46415222eb08:/scripts# ./register git.example.com
... much output
```

You'll be taken through a dialog asking a few questions, including your e-mail address. Success is indicated by:

```
IMPORTANT NOTES:
 - Congratulations! Your certificate and chain have been saved at:
   /etc/letsencrypt/live/git.example.com/fullchain.pem
   Your key file has been saved at:
   /etc/letsencrypt/live/git.example.com/privkey.pem
   Your cert will expire on 2018-06-30. To obtain a new or tweaked
   version of this certificate in the future, simply run certbot-auto
   again. To non-interactively renew *all* of your certificates, run
   "certbot-auto renew"
 - If you like Certbot, please consider supporting our work by:
   Donating to ISRG / Let's Encrypt: https://letsencrypt.org/donate
   Donating to EFF: https://eff.org/donate-le
```

And there will be files in the /etc/letsencrypt directory:

```
root@46415222eb08:/scripts# ls /etc/letsencrypt/
accounts archive csr keys live renewal renewal-hooks
root@46415222eb08:/scripts# ls /etc/letsencrypt/live
build.example.com git.example.com
root@46415222eb08:/scripts# ls /etc/letsencrypt/live/git.example.com/
README cert.pem chain.pem fullchain.pem privkey.pem
```

We can check the certificates:

```
root@46415222eb08:/scripts# ./certbot-auto certificates
-------------------------------------------------------------------------------
Found the following certs:
  Certificate Name: build.example.com
    Domains: build.example.com
    Expiry Date: 2018-06-30 01:52:35+00:00 (VALID: 89 days)
    Certificate Path: /etc/letsencrypt/live/build.example.com/fullchain.pem
    Private Key Path: /etc/letsencrypt/live/build.example.com/privkey.pem
  Certificate Name: git.example.com
    Domains: git.example.com
    Expiry Date: 2018-06-29 23:48:17+00:00 (VALID: 89 days)
    Certificate Path: /etc/letsencrypt/live/git.example.com/fullchain.pem
    Private Key Path: /etc/letsencrypt/live/git.example.com/privkey.pem
-------------------------------------------------------------------------------
```

And we can try a renewal:

```
root@46415222eb08:/scripts# ./certbot-auto renew
-------------------------------------------------------------------------------
Processing /etc/letsencrypt/renewal/build.example.com.conf
-------------------------------------------------------------------------------
Cert not yet due for renewal
-------------------------------------------------------------------------------
Processing /etc/letsencrypt/renewal/git.example.com.conf
-------------------------------------------------------------------------------
Cert not yet due for renewal
-------------------------------------------------------------------------------
The following certs are not due for renewal yet:
  /etc/letsencrypt/live/build.example.com/fullchain.pem expires on 2018-06-30 (skipped)
  /etc/letsencrypt/live/git.example.com/fullchain.pem expires on 2018-06-29 (skipped)
No renewals were attempted.
-------------------------------------------------------------------------------
```

While the SSL keys are there, we haven't set them up in the nginx configuration.
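nginx refuses to start if a file named in ssl_certificate or ssl_certificate_key is missing, so it pays to confirm that certbot actually produced both files before adding a domain's HTTPS block. This is a hypothetical helper; the optional second argument (a different base directory) exists only so it can be tried outside the container.

```sh
#!/bin/sh
# have_cert DOMAIN [LIVE_DIR]
# Hypothetical helper: succeeds only if both files that the nginx
# ssl_certificate / ssl_certificate_key lines would point at exist and
# are non-empty. The files under live/ are symlinks; test -s follows them.
have_cert() {
    domain="$1"
    live="${2:-/etc/letsencrypt/live}"
    for f in fullchain.pem privkey.pem; do
        if [ ! -s "$live/$domain/$f" ]; then
            echo "missing $live/$domain/$f" >&2
            return 1
        fi
    done
}
```

Inside the container, `have_cert git.example.com && have_cert build.example.com` should succeed for the two certificates listed above.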

Integrating certbot registrations and nginx HTTPS proxies

We're getting to the end of this journey - promise. The last step is to reconfigure nginx to support HTTPS proxying, and to automatically redirect HTTP to HTTPS.

This requires a two-proxy solution, one to handle port 80 (HTTP), redirecting anything arriving on port 80 to the HTTPS port, and the other handling the HTTPS port.

For each service you're proxying set up this pair of server definitions:

```nginx
# HTTP: redirect all traffic to HTTPS
server {
    listen 80;
    # listen [::]:80 default_server ipv6only=on;
    server_name git.example.com www.git.example.com;
    access_log /var/log/git.example.access.log main;
    error_log /var/log/git.example.error.log debug;

    # Keep serving ACME challenge files over plain HTTP
    location /.well-known/ {
        root /webroots/git.example.com/;
    }

    # The redirect sits inside a location: a server-level "return" runs
    # before location matching and would also redirect /.well-known/
    location / {
        return 301 https://$host$request_uri;
    }
}

server { # simple reverse-proxy
    # Enable HTTP/2
    listen 443 ssl http2;
    listen [::]:443 ssl http2;
    server_name git.example.com www.git.example.com;
    access_log /var/log/git.example.access.log main;
    error_log /var/log/git.example.error.log debug;

    # Use the Let's Encrypt certificates
    ssl_certificate /etc/letsencrypt/live/git.example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/git.example.com/privkey.pem;

    location /.well-known/ {
        root /webroots/git.example.com/;
    }

    location / {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-NginX-Proxy true;
        proxy_pass http://gogs:3080/;
        proxy_ssl_session_reuse off;
        proxy_set_header Host $http_host;
        proxy_cache_bypass $http_upgrade;
        proxy_redirect off;
    }
}
```

Towards the middle is a pair of ssl_certificate lines, and the file names must match with the ones downloaded by certbot.

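The proxy_pass line must match what we used earlier for the HTTP-only proxying. Since Gogs is now reached over HTTPS, you will probably also want to change ROOT_URL in gogs-app.ini to https://git.example.com/ so that the URLs Gogs generates use https://.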
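Because each service needs the same pair of server blocks, the pair can be generated per domain. This is a hypothetical sketch: the function name and the @DOMAIN@/@UPSTREAM@ placeholders are made up, it assumes certbot stored the certificate under /etc/letsencrypt/live/DOMAIN/, and it keeps only the Host, X-Real-IP and X-Forwarded-For proxy headers (add the others from the block above if you rely on them). It puts the redirect inside `location /`, because a server-level `return` runs before location matching and would also redirect the /.well-known/ challenge requests.

```sh
#!/bin/sh
# https_proxy_pair DOMAIN UPSTREAM
# Hypothetical generator: prints the HTTP-redirect and HTTPS-proxy server
# blocks for DOMAIN, proxying to UPSTREAM (for example gogs:3080).
# The heredoc delimiter is quoted, so the shell expands nothing and nginx
# variables such as $host stay literal; sed fills in the placeholders.
https_proxy_pair() {
    domain="$1"
    upstream="$2"
    sed -e "s|@DOMAIN@|$domain|g" -e "s|@UPSTREAM@|$upstream|g" <<'EOF'
server {
    listen 80;
    server_name @DOMAIN@ www.@DOMAIN@;
    access_log /var/log/@DOMAIN@.access.log main;
    error_log /var/log/@DOMAIN@.error.log debug;

    location /.well-known/ {
        root /webroots/@DOMAIN@/;
    }

    location / {
        return 301 https://$host$request_uri;
    }
}

server {
    listen 443 ssl http2;
    server_name @DOMAIN@ www.@DOMAIN@;
    access_log /var/log/@DOMAIN@.access.log main;
    error_log /var/log/@DOMAIN@.error.log debug;

    ssl_certificate /etc/letsencrypt/live/@DOMAIN@/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/@DOMAIN@/privkey.pem;

    location /.well-known/ {
        root /webroots/@DOMAIN@/;
    }

    location / {
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://@UPSTREAM@/;
        proxy_redirect off;
    }
}
EOF
}
```

For this setup, `https_proxy_pair git.example.com gogs:3080` and `https_proxy_pair build.example.com nuc2.local:49001` would print the two pairs.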

With success you can now visit the https:// version of your servers, and visiting the http:// version will automatically redirect to https://.

In the browser location bar, verify the green lock icon shows up and it says Secure. You should inspect the certificate using the web browser to ensure all is well.