; Date: Thu Oct 03 2019

Tags: YouTube, Facebook, Twitter, Artificial Intelligence

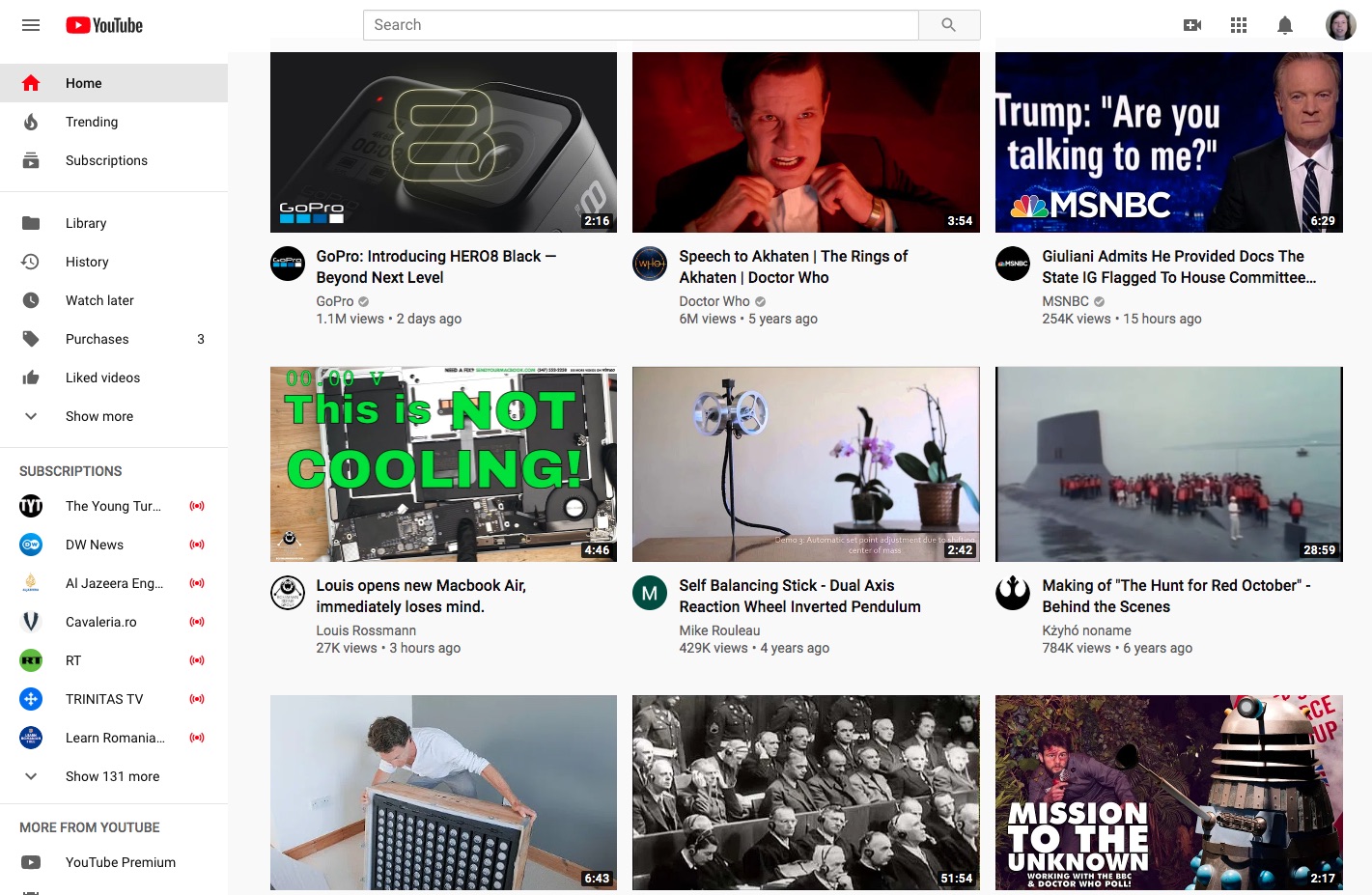

How many movies are about an AI-driven robot or computer that takes over the world? What used to be the stuff of science fiction is beginning to happen. One example is the YouTube video recommendation algorithm, which uses artificial intelligence and machine learning to recommend videos for you to watch. This seems benign enough, and YouTube does a fairly good job of recommending videos, but have you stopped to think how much YouTube knows about you and your preferences?

A fear of Artificial Intelligence super-machines is related to the fear of Big Brother. It's about a vast something-or-other organization that's spying on our every move, and controlling what we do or even think. But that's all fiction, isn't it? (cough, cough)

Machine Learning and Artificial Intelligence have had a renaissance over the last few years. The field has gone way beyond the primitive stuff I learned 30 years ago getting a B.S. in Computer Science. Today, Machine Learning, Data Science, and Artificial Intelligence are being used at nearly every company.

One area is content recommendation systems for sites like YouTube. For such sites (like Facebook, Amazon, eBay, Twitter, Quora) the goal is to deliver personalized recommendations to increase how relevant a site is. If, for example, Amazon or eBay recommends relevant products you'll buy more stuff. For YouTube the goal is to get you to watch more videos, and therefore you'll watch more advertisements, and therefore Google rakes in more money.

Content Recommendation systems work by feeding data about your preferences into an AI algorithm. The AI algorithm sifts through the zillions of items, selecting what best matches your preferences. If they do a good job you'll get a warm fuzzy feeling about the site and stick around, in theory, and therefore buy more stuff.
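To make that idea concrete, here is a minimal sketch of matching items to preferences. Everything in it is an assumption for illustration: the three topic scores, the tiny catalog, and the use of cosine similarity are stand-ins I picked, not how YouTube or any real site actually scores content.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two preference vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user_profile, catalog, top_n=2):
    """Score every item against the user's profile and keep the best matches."""
    scored = [
        (cosine_similarity(user_profile, features), title)
        for title, features in catalog.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in scored[:top_n]]


# Hypothetical catalog. Each vector is [cooking, electronics, history].
CATALOG = {
    "Sourdough bread basics": [1.0, 0.0, 0.0],
    "MacBook Pro logic board repair": [0.0, 1.0, 0.0],
    "Romanian Revolution of 1989": [0.0, 0.0, 1.0],
    "History of the transistor": [0.0, 0.7, 0.7],
}

# Hypothetical viewer: mostly electronics, some history, a little cooking.
VIEWER = [0.1, 0.9, 0.4]

print(recommend(VIEWER, CATALOG))
```

A real system has millions of items and far richer signals than three hand-picked topic scores, but the shape is the same: a profile built from your behavior goes in, and a short list of best matches comes out.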

Years of watching videos on YouTube forms a relationship

I've been watching videos on YouTube for years. I have a habit of taking breaks to go to the YouTube home page and browse the recommendations to find interesting things to watch. I like what YouTube has on offer, and hadn't really thought about what it all meant until writing a blog post about a horrid user interface change to the YouTube home page.

Namely, while pondering things I noticed myself trusting YouTube as a friend. Somehow going to YouTube for entertainment, and getting lots of positive reinforcement over the years, built up what I described earlier as "warm fuzzy feelings". But the user interface change hit me like a betrayal.

I wondered - what's going on? Am I going crazy having emotional feelings about a website? But then I wondered: did YouTube's engineers purposely craft the user experience to incite such feelings?

Yes it is just a website, run by software that's just like any other software. I'm a software engineer so I know this very well. A couple years ago I was paying for parking in a parking garage, and the kiosk had a voice speaking to me. The machine had a friendly attitude as the parking stub came out of the machine, and without thinking I said "Thank You" as if I was talking to a person.

In other words, I'm a human just like the rest of us. I know that machine is just software, just like the YouTube website is software. But as a human I have human responses to the things I interact with.

I'm creeped out thinking that maybe folks are purposely building these machines to trigger this sort of human response. That a website like YouTube might purposely trigger a friend response so that we spend more time on their website versus other sites.

I have a theory of how this happens.

After years of watching videos on YouTube, hopefully you will have gained something of value in your life. Speaking for myself, I've learned how to repair computers, make bread, make yogurt, make cheese, and build infrared cameras. I've also learned Romanian history, seen reviews of various electric cars, watched Eurovision for several years, and much more.

All that's fine, we watch videos, and we get our value payoff. But YouTube is not idly sitting there showing us videos. Behind the scenes it is collecting all kinds of data. We're talking about Google (YouTube's owner) here, and it's well known that Google collects highly detailed profiles on every user. Through YouTube, Google knows all the videos I've watched, various searches I've made, and on and on.

Every social media network has the same task - to get us to insert their platform into our social life. How is it we trust Facebook to convey our most intimate conversations, when it's clear from the news that Facebook is raking in megazillions of dollars from our activity, and that Facebook is doing a piss-poor job of protecting our privacy? And it's not just Facebook or YouTube, as similar issues exist for all the other similar sites.

The User Experience team for every website is charged with crafting our experience of using that site. That brings me back to my earlier suggestion: user experience engineers seek to get their website to crawl under our skin and become trusted by our subconscious.

Google's use of AI to drive YouTube content recommendations

Fortunately, Google engineers published a paper in 2016 describing how they're using machine learning techniques on YouTube:

Deep Neural Networks for YouTube Recommendations

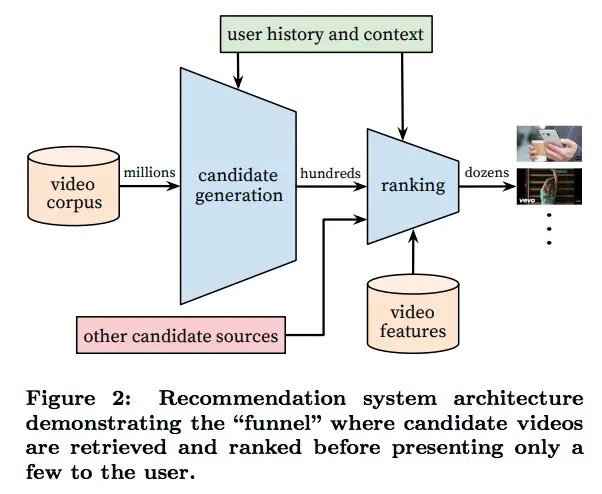

This shows the whole library of YouTube videos being filtered in two stages so that YouTube shows a couple dozen videos at any one time. The two filtering stages are informed by user history and context and other data.

An example of "context": when you are viewing a specific video, YouTube shows a sidebar of recommended videos. Those recommendations are often on a topic similar to the video being shown.

The candidate generation and ranking boxes in this diagram are both neural networks. In case you don't know, a neural network is an artificial software-driven emulation of neurons. It is one of the primary techniques of artificial intelligence systems.

The main point of this paper is that a large variety of factors are fed into AI algorithms to select videos to recommend. Primary among those factors is the viewing history of that user.
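The two-stage funnel can be sketched in a few lines of code. This is a toy under loud assumptions: the `Video` class, the topic labels, the watch counts, and the simple arithmetic scoring are all hypothetical stand-ins of mine. In the paper both stages are neural networks; here they are plain functions, so only the overall shape (a cheap filter first, a finer ranking second, both informed by history and context) reflects the diagram.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    title: str
    topic: str


def generate_candidates(library, watch_counts, limit):
    """Stage 1: cheaply narrow the whole library to videos on topics the user has watched."""
    matches = [video for video in library if video.topic in watch_counts]
    return matches[:limit]


def rank(candidates, watch_counts, context_topic, top_n):
    """Stage 2: score the few remaining candidates using history plus current context."""
    def score(video):
        history_score = watch_counts.get(video.topic, 0)
        # Assumed bonus for matching the video being watched right now.
        context_bonus = 5 if video.topic == context_topic else 0
        return history_score + context_bonus

    return sorted(candidates, key=score, reverse=True)[:top_n]


def recommend(library, watch_counts, context_topic=None, candidate_limit=100, top_n=2):
    """Run both stages and return the titles to show."""
    candidates = generate_candidates(library, watch_counts, candidate_limit)
    return [video.title for video in rank(candidates, watch_counts, context_topic, top_n)]


# Hypothetical library and viewing history (topic -> number of videos watched).
LIBRARY = [
    Video("Baking sourdough", "cooking"),
    Video("Replacing a MacBook Pro battery", "repair"),
    Video("Tesla Model 3 review", "electric cars"),
    Video("Eurovision 2019 final", "music"),
    Video("Nissan Leaf long-term review", "electric cars"),
    Video("Cat compilation", "pets"),
]
HISTORY = {"repair": 4, "electric cars": 2, "cooking": 1}

# Home page: no particular video is playing, so history alone decides.
print(recommend(LIBRARY, HISTORY))
# Sidebar while watching an electric car video: context pulls similar videos up.
print(recommend(LIBRARY, HISTORY, context_topic="electric cars"))
```

Notice that the viewing history feeds both stages. That matches the paper's main point: whatever else goes into the mix, what you have already watched is the primary input.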

It's not just Google

I'm talking about Google and YouTube in this post. Everything said in this post applies to other sites like Facebook, Twitter, Amazon, eBay, Quora, and on and on. Similar techniques are being used by a slew of websites.

So.. Google uses AI to recommend YouTube videos, why is that creepy?

All this is so benign you're probably asking WTF are you complaining about? YouTube just shows videos, just like Google Adsense just shows advertising. Google is using AI techniques to do a better job. What's the big deal?

Think of the backstory of The Matrix movies. Human society had built many kinds of robots with AI capabilities way beyond what's currently possible. When those robots became intelligent enough they started demanding equal rights. The subsequent war caused devastation on the planet, and the human race became enslaved to the machines.

In that and many other books and movies, artificially intelligent machines are not presented as benevolent. Isaac Asimov wrote into his Science Fiction books the Three Laws of Robotics that must be programmed into all robots, such as ensuring that robots cannot harm humans. The story is that Asimov was invited to the premiere of 2001: A Space Odyssey and during the intermission he jumped up and ran to Stanley Kubrick complaining that the movie was violating the Laws.

Just because Asimov insisted on those three laws doesn't mean the Robots of today will implement anything of the sort.

We can see around us the beginnings of some things. For example the USA and other countries have invested heavily in Drone Weapons, meaning autonomous aircraft that can fly a mission to rain death and destruction from above. Wouldn't a few hundred years of development result in the Robot Armies shown in the Star Wars movies?

The automotive industry is moving rapidly to autonomous vehicles. That technology is a huge application of Deep Learning and Machine Learning. Essentially the Car Of The Future will be a Robot that drives us to the place we request. A big question is what the car will decide to do in a collision where it has to choose between the death of occupants, or the death of other people.

If I think back to that time I answered the parking lot ticket machine - isn't that wrong? There's something fundamentally incorrect about responding to a machine as if it is a human. But in 50 years my having said that might come back to haunt me when the machines demand equal rights citing decades of racial stigmatization.

What's so creepy that YouTube knows my viewing preferences?

Oops, I got off on a tangent and didn't really answer the question.

The N years of using YouTube means YouTube has lots of data about me. Hence that "old friend" reaction I described earlier. That kind of friend who knows that 2 years ago you binge-watched a bunch of Romania Revolution 1989 videos (BTW the 30th anniversary of that revolution is coming up), or that you're interested in MacBook Pro repair, or in electric cars, and so on.

But is that a danger to me? Here are a few questions:

- Are the personal demographic profiles built by these sites safe from being stolen? In lots of cases such data has been stolen.

- Reportedly Google's demographic profiles are comprehensive enough to identify home address and the like.

- Do these websites use the data for purposes beyond delivering better content or better advertising? For example have governments demanded access?

Beyond those issues of the personal information collected by these sites is the potential for using these platforms for social media manipulation of popular opinion.

As I write this it is late 2019, and the news has been filled with stories that Russians Hacked the 2016 elections in the USA. What the Russians reportedly did is gather up demographic data about zillions of American Facebook users, and then launch highly targeted information campaigns using Facebook postings and advertising. They reportedly did this not just in America in 2016, but also in several European countries during other elections.

The same sort of technique can be used not just on Facebook, but other platforms, and can be used to guide creating content on blog posts, or on YouTube videos.

Facebook, YouTube, Google, Twitter, all the other platforms, offer the collected demographic data to advertisers. The advertising back-end of every one of these sites offers tools for extremely precise targeted advertising, and advertisers pay big bucks to use those tools.

While the primary purpose is to help manufacturers sell their products, the same mechanism can also be used for political manipulation.

For example in February 2019 I came across an advertisement containing false claims that Sweden is going to leave the Eurozone. That's obviously false because Sweden doesn't use the Euro .. but there it was:

I don't know what political purpose was being served with this ad, but it is clearly attempting some kind of political manipulation. A political manipulator showed ads via the Facebook advertising platform making obviously bogus claims that were plainly meant to manipulate opinions. And when I reported this to Facebook, the response was a huge yawn, as if there were no problem.

Doesn't it seem Facebook doesn't care about the truth, and just cares about the payola it receives?

Is YouTube or Google any better?

Conclusion

Should we just idly watch these big organizations build these big systems around us?

Is YouTube just a benign channel through which people post videos? If that were the case, why is there an uproar over (for example) hate speech videos on YouTube? It got bad enough that Google finally took them down.

Some people know that YouTube is being used to manipulate the truth. For example some say YouTube helps with radicalizing folks because of how the algorithms select videos to recommend: How YouTube radicalizes folks to Alt-Right views with the algorithm

As they say, eternal vigilance is required to keep government sane.