; Date: Sun Mar 22 2020

Tags: Node.JS, Docker

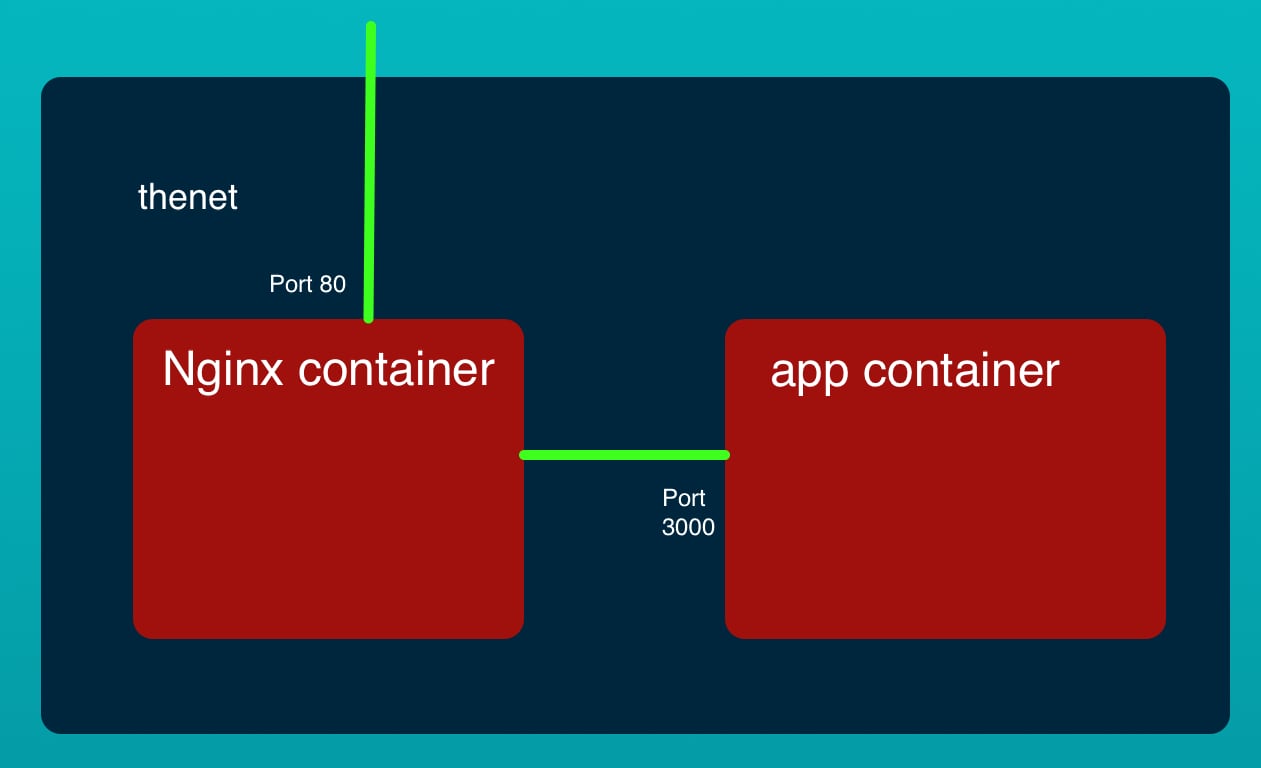

In a world of microservices, a Docker deployment probably consists of multiple small containers, each implementing one service, with an NGINX container facing the web browsers. There are many reasons for doing this, but let's instead focus on how to set up NGINX and Node.js together in Docker. It is relatively easy, and to explore it we'll set up a two-container system: an NGINX container, and a simple Node.js app standing in as a back-end service.

The components of this example are widely used in modern software engineering. NGINX is a lightweight, high-throughput web server. Node.js is an application development platform for running JavaScript applications outside of web browsers. Docker is a system for packaging software into containers for deployment. While the example application is trivial, its structure is similar to real application deployments.

One modern paradigm, the microservice architecture, has multiple back-end services, each exposing a REST API, and a front end that glues those services together into a web user interface. With Docker, NGINX can serve as part of that front end, alongside various containers running Node.js or other service applications.

The example application used in this tutorial is a highly simplified version of that architecture.

If you haven't already done so, install Docker on your laptop. On Windows or macOS you need the Docker for Mac or Docker for Windows application, which runs the Docker Engine inside a lightweight Linux virtual machine. On Linux you simply install the Docker Engine.

- Learn about installing Docker for Mac
- Learn about Docker for Windows
- For Linux you instead install the Docker Engine

The application to deploy

The code is meant to be an example of a multi-tier application stack. Standing in for the front end is an NGINX container whose configuration proxies requests to the back-end tier. Standing in for the back-end tier is a simple Node.js application. In a real production application both would be much more complex, and the Node.js app could make calls to further back-end services such as databases or other microservices.

The source code is on GitHub at robogeek/aws-ecs-nodejs-sample.

The example repository has two directories: app, containing the Node.js code, and nginx, containing the NGINX server configuration.

The Node.js application

In app we have a Dockerfile containing:

```dockerfile
FROM node:13
COPY server.js /
EXPOSE 3000
CMD [ "node", "/server.js" ]
```

This declares the application's container image. It starts from the official node:13 image, which has Node.js 13.x pre-installed. The setup is simple: we copy server.js into the root directory of the container, and the last line executes it.

Unlike most Node.js applications, this one is so simple it does not require extra packages. A more typical Node.js Dockerfile would use more source files, a package.json file, and would run npm install.

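The EXPOSE instruction documents that this container provides a service on TCP port 3000. It does not by itself publish the port to the host; that is done with a ports entry in docker-compose.yml or the -p option of docker run.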

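The final line runs node /server.js, which starts the server. We don't need a separate process manager, because Docker can restart the container when the process exits, provided the container has a restart policy such as restart: always in docker-compose.yml or --restart always on docker run. Without a restart policy, a crashed container simply stays stopped.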

In app we also have server.js, containing:

```javascript
const http = require('http');
const util = require('util');
const url = require('url');
const os = require('os');

const server = http.createServer();

// Every request, whatever its URL, gets the same page of OS information
server.on('request', (req, res) => {
  console.log(req.url); // log the requested path to the container's output
  // Declare the response as HTML rather than leaving the browser to guess
  res.writeHead(200, { 'Content-Type': 'text/html; charset=utf-8' });
  res.end(
`<html><head><title>Operating System Info</title></head>
<body><h1>Operating System Info</h1>
<table>
<tr><th>TMP Dir</th><td>${os.tmpdir()}</td></tr>
<tr><th>Host Name</th><td>${os.hostname()}</td></tr>
<tr><th>OS Type</th><td>${os.type()} ${os.platform()} ${os.arch()} ${os.release()}</td></tr>
<tr><th>Uptime</th><td>${os.uptime()} ${util.inspect(os.loadavg())}</td></tr>
<tr><th>Memory</th><td>total: ${os.totalmem()} free: ${os.freemem()}</td></tr>
<tr><th>CPU's</th><td><pre>${util.inspect(os.cpus())}</pre></td></tr>
<tr><th>Network</th><td><pre>${util.inspect(os.networkInterfaces())}</pre></td></tr>
</table>
</body></html>`);
});

// Port 3000 matches EXPOSE 3000 in the Dockerfile
server.listen(3000);
```

This is our very simple application. It is a trivial HTTP server that indeed listens on port 3000. For any request URL we will respond with some operating system information. Remember, we're keeping this simple.
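Docker can also be asked to check that this server is actually answering requests. A health check (a HEALTHCHECK instruction in a Dockerfile, or a healthcheck entry in docker-compose.yml) runs a command inside the container and treats exit code 0 as healthy and 1 as unhealthy; the result shows up in the STATUS column of docker ps. On its own, Docker only reports that status and does not restart an unhealthy container. Since the app image already contains Node.js, the check can be written in JavaScript. This is a minimal sketch that assumes a hypothetical healthcheck.js file and `checkHealth` helper, neither of which is part of the sample repository.

```javascript
const http = require('http');

// Hypothetical helper: resolves true when a GET request to `url`
// returns HTTP 200 within `timeoutMs`, and false otherwise.
function checkHealth(url, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const req = http.get(url, (res) => {
      res.resume(); // drain the body; only the status code matters
      resolve(res.statusCode === 200);
    });
    // Abort slow requests; destroy() triggers the 'error' handler below
    req.setTimeout(timeoutMs, () => req.destroy(new Error('timeout')));
    // Connection refused, reset, or timed out all count as unhealthy
    req.on('error', () => resolve(false));
  });
}

// In a hypothetical healthcheck.js, Docker only looks at the exit code
// (0 = healthy, 1 = unhealthy):
// checkHealth('http://localhost:3000/').then((ok) => process.exit(ok ? 0 : 1));
```

To use it, healthcheck.js would have to be copied into the image alongside server.js, and the app service in docker-compose.yml would reference it with a healthcheck entry along the lines of `test: ["CMD", "node", "/healthcheck.js"]`.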

The NGINX server configuration

Then in nginx we have a Dockerfile containing:

```dockerfile
FROM nginx:stable
COPY nginx.conf /etc/nginx/nginx.conf
EXPOSE 80
```

This image runs NGINX with a custom configuration file. The nginx:stable image we derive from already contains a CMD instruction that starts NGINX, so all we need to do is inject the custom configuration. Another way to do this is with a volume mount, using -v on the docker run command or a volumes entry in docker-compose.yml.

In nginx we have nginx.conf containing:

```nginx
user nginx nginx;
worker_processes 4;
error_log /var/log/nginx-error.log debug;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx-access.log main;
    sendfile on;
    keepalive_timeout 65;

    server { # simple reverse-proxy
        listen 80;
        location / {
            proxy_pass http://app:3000/;
        }
    }
}
```

This sets up a simple HTTP server on port 80 that proxies every request to a service at http://app:3000/.

What remains is making the hostname app resolve to the container running server.js, which is a matter of Docker configuration.
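To make the proxying concrete, here is a rough Node.js sketch of what `location / { proxy_pass http://app:3000/; }` does: accept a request, replay it against the upstream with the same method, path, and headers, and stream the upstream's response back to the client. It only illustrates the idea and is not how NGINX is implemented; NGINX adds buffering, timeouts, and much more. `createProxy` is a hypothetical name. The Host and Connection headers imitate NGINX's defaults, which send the upstream's host and port as Host and do not keep upstream connections alive.

```javascript
const http = require('http');

// Hypothetical helper: a server that forwards every request to
// upstreamHost:upstreamPort and streams the response back to the client.
function createProxy(upstreamHost, upstreamPort) {
  return http.createServer((clientReq, clientRes) => {
    const upstreamReq = http.request({
      host: upstreamHost,
      port: upstreamPort,
      method: clientReq.method,
      path: clientReq.url, // location "/" mapped to "/": the path is unchanged
      headers: {
        ...clientReq.headers,
        host: `${upstreamHost}:${upstreamPort}`, // like NGINX's $proxy_host
        connection: 'close', // no keep-alive to the upstream
      },
    }, (upstreamRes) => {
      // Relay status and headers, then stream the body through
      clientRes.writeHead(upstreamRes.statusCode, upstreamRes.headers);
      upstreamRes.pipe(clientRes);
    });
    upstreamReq.on('error', () => {
      // Answer 502 Bad Gateway when the upstream can't be reached
      if (!clientRes.headersSent) {
        clientRes.writeHead(502, { 'Content-Type': 'text/plain' });
        clientRes.end('Bad Gateway');
      } else {
        clientRes.destroy();
      }
    });
    clientReq.pipe(upstreamReq); // forward any request body
  });
}
```

Running `createProxy('app', 3000).listen(80)` in a container attached to the same network would play roughly the role of the NGINX container in this example.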

Parent directory - Docker Compose file

In the directory above these two directories, we have docker-compose.yml containing:

```yaml
version: '3'

services:
  nginx:
    build: ./nginx
    container_name: nginx
    networks:
      - thenet
    ports:
      - '80:80'
  app:
    build: ./app
    container_name: app
    networks:
      - thenet
    ports:
      - '3000:3000'

networks:
  thenet:
```

This defines two containers, built from the two directories, attached to the same Docker bridge network. The nginx container publishes port 80 on the host, while the app container publishes port 3000.

Docker bridge networks are virtual network segments that exist entirely inside the Docker host but behave like real network segments. On a user-defined bridge network such as thenet, Docker provides DNS-based service discovery between the attached containers, and the network isolates them from containers that are not attached to it.

Because thenet is a user-defined bridge network, Docker's embedded DNS server resolves the service name app (which here is also the container name) to the app container's address, so the app is reachable at http://app:3000. That's exactly what we put in nginx.conf.
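One way to see this service discovery at work is to look names up from inside a container on thenet. Docker points the resolver of each container on a user-defined network at its embedded DNS server (127.0.0.11), so an ordinary lookup through Node's dns module sees the Compose service names. This is a minimal sketch, assuming it runs inside the app container (the nginx image does not include Node.js); `resolveService` is a hypothetical helper name.

```javascript
const dns = require('dns').promises;

// Hypothetical helper: look up a hostname with the system resolver.
// Inside a container on thenet, that resolver is Docker's embedded DNS,
// so service names such as "nginx" and "app" resolve to container IPs.
async function resolveService(name) {
  const { address, family } = await dns.lookup(name);
  return { name, address, family };
}

// Example, run inside the app container:
// resolveService('nginx').then(console.log);
```

A quick one-off check along the same lines is `docker-compose exec app node -e "require('dns').lookup('nginx', console.log)"`, which should print the nginx container's address on thenet.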

We can use docker-compose up to build the images if needed, create the containers, and start them. Then we can visit http://localhost in a web browser to view the application.

```
$ docker-compose up
Starting nginx ... done
Starting app ... done
Attaching to app, nginx
^CGracefully stopping... (press Ctrl+C again to force)
Stopping nginx ... done
Stopping app ...
```

In this mode we are attached to the containers and see their logging output, and pressing Ctrl+C stops them.

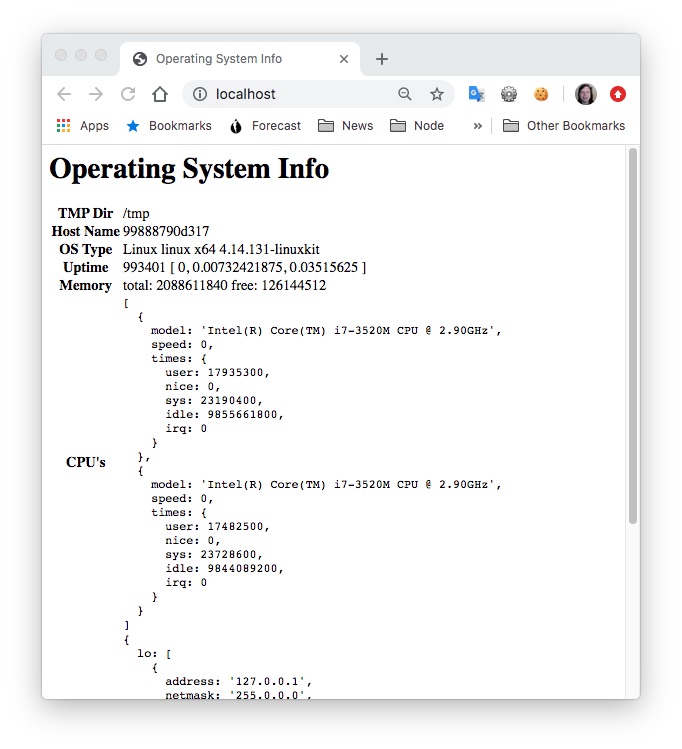

During the time the Node.js/NGINX stack is running, we can visit http://localhost to see this:

Something to notice is that the host computer is macOS, and even though the application is running on the same host it is reporting itself as a Linux system. That's because Docker runs the container in a virtualized Linux environment.

An alternative is:

```
$ docker-compose start
Starting nginx ... done
Starting app ... done
$ docker-compose stop
Stopping nginx ... done
Stopping app ... done
```

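This runs the stack in the background, letting us manage the containers without keeping them as a foreground task in a terminal session. (docker-compose up -d also runs the stack in the background, and creates the containers if necessary.)

docker-compose start only starts containers that already exist, so the images must have been built and the containers created beforehand, for example by an earlier docker-compose up. One way to build the images is with Docker Compose: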

```
$ docker-compose build
Building nginx
Step 1/2 : FROM nginx:stable
---> 8c5ec390a315
Step 2/2 : COPY nginx.conf /etc/nginx/nginx.conf
---> 9b1a94eabec8
Successfully built 9b1a94eabec8
Successfully tagged aws-ecs-nodejs-sample_nginx:latest
Building app
Step 1/3 : FROM node:13
---> 7aef30ae6655
Step 2/3 : COPY server.js /
---> Using cache
---> bec0004d3171
Step 3/3 : CMD [ "node", "/server.js" ]
---> Using cache
---> 7930b8b2a698
Successfully built 7930b8b2a698
Successfully tagged aws-ecs-nodejs-sample_app:latest
```

This rebuilds both container images.

Another method to build the containers is to add a package.json to the parent directory containing:

```json
{
  "scripts": {
    "build": "npm run build-app && npm run build-nginx",
    "build-app": "cd app && docker build -t app:latest .",
    "build-nginx": "cd nginx && docker build -t nginx:latest ."
  }
}
```

This package.json exists only so we can use npm to run shell commands; it does not manage a full Node.js project. We run the build this way:

```
$ npm run build

> @ build /.../nodejs/aws-ecs-nodejs-sample
> npm run build-app && npm run build-nginx

> @ build-app /.../nodejs/aws-ecs-nodejs-sample
> cd app && docker build -t app:latest .

Sending build context to Docker daemon 3.584kB
Step 1/3 : FROM node:13
---> 7aef30ae6655
Step 2/3 : COPY server.js /
---> Using cache
---> a9f3ab1557f9
Step 3/3 : CMD [ "node", "/server.js" ]
---> Using cache
---> d1d1db4cffda
Successfully built d1d1db4cffda
Successfully tagged app:latest

> @ build-nginx /.../nodejs/aws-ecs-nodejs-sample
> cd nginx && docker build -t nginx:latest .

Sending build context to Docker daemon 3.584kB
Step 1/2 : FROM nginx:stable
---> 8c5ec390a315
Step 2/2 : COPY nginx.conf /etc/nginx/nginx.conf
---> Using cache
---> 833ff43f54cd
Successfully built 833ff43f54cd
Successfully tagged nginx:latest
```

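Instead of using docker-compose to build, this uses the normal docker build command. Note that these images are tagged app:latest and nginx:latest, not the aws-ecs-nodejs-sample_app and aws-ecs-nodejs-sample_nginx names that docker-compose builds and runs, so Docker Compose will not use them. Tagging an image nginx:latest also shadows the official nginx:latest image on your machine.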

We can inspect the images:

```
$ docker images
REPOSITORY                    TAG      IMAGE ID       CREATED              SIZE
aws-ecs-nodejs-sample_nginx   latest   9b1a94eabec8   About a minute ago   127MB
aws-ecs-nodejs-sample_app     latest   7930b8b2a698   About a minute ago   943MB
```

Other images will be listed as well, such as the node:13 and nginx:stable base images these were built from, along with any other images on your machine.

If we start the containers we can inspect them:

```
$ docker-compose start
Starting nginx ... done
Starting app ... done
$ docker ps -a
CONTAINER ID   IMAGE                       COMMAND                  CREATED        STATUS         PORTS                    NAMES
44500dfdecd9   abd277f8bd6e                "nginx -g 'daemon of…"   21 hours ago   Up 2 seconds   0.0.0.0:80->80/tcp       nginx
496f747c24f5   aws-ecs-nodejs-sample_app   "docker-entrypoint.s…"   21 hours ago   Up 3 seconds   0.0.0.0:3000->3000/tcp   app
$ docker-compose stop
Stopping nginx ... done
Stopping app ... done
$ docker ps -a
CONTAINER ID   IMAGE                       COMMAND                  CREATED        STATUS                       PORTS   NAMES
44500dfdecd9   abd277f8bd6e                "nginx -g 'daemon of…"   21 hours ago   Exited (0) 12 seconds ago            nginx
496f747c24f5   aws-ecs-nodejs-sample_app   "docker-entrypoint.s…"   21 hours ago   Exited (137) 2 seconds ago           app
```

Notice that the STATUS column changed from "Up # seconds" to "Exited (0) 12 seconds ago".
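The app container, however, exited with status 137 rather than 0, and 10 seconds after nginx. 137 is 128 + 9, meaning the process was killed with SIGKILL: docker-compose stop sends SIGTERM, waits (10 seconds by default), and then sends SIGKILL. Node.js does not install a SIGTERM handler by default, and the Linux kernel does not apply default signal actions to a container's PID 1, so a plain `node /server.js` ignores SIGTERM. This is a minimal sketch of a handler that server.js could install; `handleShutdown` is a hypothetical helper, and its `proc` and `exit` parameters exist only so it can be exercised without sending real signals. Another option is Docker's `--init` flag (or `init: true` in Compose file format 3.7 or later), which runs a small init process in front of Node.js.

```javascript
// Hypothetical helper: when a stop signal arrives, stop accepting new
// connections, wait for open ones to finish, then exit.
// `proc` and `exit` default to the real process.
function handleShutdown(server, {
  proc = process,
  signals = ['SIGTERM', 'SIGINT'],
  exit = (code) => process.exit(code),
} = {}) {
  let closing = false;
  for (const signal of signals) {
    proc.once(signal, () => {
      if (closing) return; // ignore a different stop signal while closing
      closing = true;
      console.log(`${signal} received, closing HTTP server`);
      // The callback runs once all existing connections have ended
      server.close((err) => exit(err ? 1 : 0));
    });
  }
}

// In server.js, after server.listen(3000):
// handleShutdown(server);
```

Because server.close() waits for open connections, a browser holding a keep-alive connection directly to port 3000 can still delay shutdown somewhat, but the container should then stop well within Docker's timeout.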

Something to notice is that the app container is directly reachable on port 3000 of the host, bypassing NGINX. This is a potential security hole, and one reason to put NGINX in front of a Node.js app is to act as a security shield.

In docker-compose.yml change the definition for the app container to:

```yaml
  app:
    build: ./app
    container_name: app
    networks:
      - thenet
    # Do not publish
    # ports:
    #   - '3000:3000'
```

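With this change the port is no longer published. For the change to take effect the containers must be recreated, which docker-compose up --build does; docker-compose start on its own only restarts containers that already exist.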

```
$ docker-compose up --build
....OR...
$ docker-compose build
...
$ docker-compose start
Starting nginx ... done
Starting app ... done
$ docker ps
CONTAINER ID   IMAGE                         COMMAND                  CREATED          STATUS         PORTS                NAMES
99888790d317   aws-ecs-nodejs-sample_app     "docker-entrypoint.s…"   28 seconds ago   Up 4 seconds                        app
fef398e3aa88   aws-ecs-nodejs-sample_nginx   "nginx -g 'daemon of…"   57 seconds ago   Up 4 seconds   0.0.0.0:80->80/tcp   nginx
```

Notice that port 3000 is no longer published from the app container.
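docker ps shows the change from Docker's side. To confirm it from the host's side, one can try opening TCP connections: while the stack is running, port 80 should still accept connections and port 3000 should now refuse them. This is a minimal Node.js sketch; `isPortOpen` is a hypothetical helper, and its 1-second timeout is an arbitrary choice.

```javascript
const net = require('net');

// Hypothetical helper: resolves true if a TCP connection to host:port
// succeeds within timeoutMs, false on refusal, error, or timeout.
function isPortOpen(host, port, timeoutMs = 1000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (open) => {
      socket.destroy(); // we only wanted to know whether it connects
      resolve(open);
    };
    socket.setTimeout(timeoutMs, () => finish(false));
    socket.once('connect', () => finish(true));
    socket.once('error', () => finish(false));
  });
}
```

With the stack running, `isPortOpen('localhost', 80)` should resolve to true and `isPortOpen('localhost', 3000)` to false.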

Summary

While this is a trivial example it should give one a start on deploying multi-tier applications using Docker.

One next step is to graduate to Docker Swarm, which can run and scale multiple replicas of each service across a cluster of hosts. Alternatively, one can explore other container orchestration systems such as Kubernetes or AWS ECS.