; Date: Sat Jan 20 2024

Tags: Linux »»»»

Amazon's AWS S3 (Simple Storage Service) offers bulk data storage on "the cloud". With the correct software, we can access S3 services from AWS (or compatible providers), on Linux or macOS, using command-line tools, or mounting it as a filesystem.

S3 is a convenient data storage service that's accessed by the S3 API. The typical use is a server-side application to store or retrieve data. But, that API can be used by any kind of application. This includes command-line utilities or filesytem drivers to access "objects" in S3 storage in a manner similar to files in a filesystem.

The compelling idea for AWS S3 is data storage where the maximum size is not limited, and which can be accessed from anywhere on the Internet. It's different from a disk where there is a hardware-imposed limit on the partition size. S3 buckets grow as you add data, and shrink as you delete data. The S3 provider takes care of allocating disk space, with your monthly fee depending on data transfer (bandwidth consumption) and the amount of stored data.

While S3 is described as an "object storage service", it acts somewhat like a disk drive containing files in a filesystem. One starts by creating a "Bucket" which, as the name implies, is a container for "Objects". The objects are indexed with a key, and an optional version number.

Roughly speaking, the bucket is a disk drive, the object is a file, and the key is the file name. But don't confuse this with a normal filesystem. For example keys (file names) are simply keys and are not a hierarchy.

While AWS invented S3, the API has been implemented by open source projects. Multiple 3rd party cloud providers offer object storage services compatible with S3. Additional S3 implementations include open source command-line tools and filesystem drivers.

This article is focusing on accessing S3 buckets from the command-line environment of Linux, macOS and possibly Windows computers. The S3 bucket service may be AWS or any other provider. In this case the DreamObjects service from DreamHost is used.

S3 usage architecture - buckets, objects, keys

In S3 nomenclature, the "bucket" is what it sounds like, a software thingy into which you store data. In S3, data is stored as "Objects" rather than "Files". The AWS documentation describes these as:

- An object is a file and any metadata that describes the file.

- A bucket is a container for objects.

Objects are identified with a key, which is a string that must be unique within the bucket. There is also an optional version identifier to support storing multiple revisions of an object. Hence, the combination of bucket, key, and version, identifies a specific version of a specific object.

Typically object keys use file-system-like names like "photos/puppies/generalissimo-bowser.jpg".

Creating an S3 storage bucket

There is of course in the S3 API a function to create buckets. One can write custom software for this purpose.

Or, more likely, you go to the web control panel provided by the S3-compatible service you're using. This could be at aws.amazon.com, backblaze.com, dreamhost.com, etc. In my case, I am using the DreamObjects service provided by Dreamhost.

One logs in to their Dreamhost panel, and navigates to the Cloud Services section. To get started, you enable the DreamObjects service, after which there is a control panel to use. For other providers, you navigate to the corresponding control panel, and click on the correct buttons to manage your S3 buckets.

The first step is to create a key pair. These are tokens with which you access the S3 service. These are the access_key and secret_key. It's important to carefully protect these keys. They are essentially a user-name and password for your S3-compatible service. Anyone who gets these keys can do anything they like with your S3 storage.

The access_key and secret_key are used by software for every S3 API call to authenticate your software.

The bucket has a name, like test-bucket-dsh-1. The bucket name has to be unique across all users of the S3 system you're using. Hence, it's useful to include a unique string with your bucket name. For example "dsh" is my initials.

There are attributes and settings to configure on buckets. AWS supports a comprehensively long list of settings. On DreamObjects, you can declare whether a bucket is public or private, and you can assign a domain name.

Using S3cmd to access S3 buckets from Linux, macOS, and maybe Windows

S3cmd is open source software for interacting with S3-compatible storage sytems. It runs on Linux or macOS systems. A commercial version, S3Express, is available for Windows.

The official

installation instructions says that s3cmd is available through the package management systems of most Linux systems, as well as via MacPorts or Homebrew on macOS.

For example, on Ubuntu/Debian, these packages are available:

$ apt-cache search s3cmd

s3cmd - command-line Amazon S3 client

s4cmd - Super Amazon S3 command line tool

Um,

S4cmd is an alternate that claims to support more features or something.

Since S3cmd is in the Ubuntu/Debian repositories, run:

$ sudo apt-get update

$ sudo apt-get install s3cmd

For macOS using MacPorts:

$ sudo port install s3cmd

It can also be installed with PIP:

$ sudo pip install s3cmd

Lastly, you can clone the

GitHub repository and run the

s3cmd from that directory. The python setup.py install command is useful in this case. This method may work on Windows.

Once installed, you must configure s3cmd:

$ s3cmd --configure

Enter new values or accept defaults in brackets with Enter.

Refer to user manual for detailed description of all options.

Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.

Access Key: ####################

Secret Key: ########################################

Default Region [US]:

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.

S3 Endpoint [s3.amazonaws.com]: objects-us-east-1.dream.io

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used

if the target S3 system supports dns based buckets.

DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: %(bucket)s.objects-us-east-1.dream.io

Encryption password is used to protect your files from reading

by unauthorized persons while in transfer to S3

Encryption password:

Path to GPG program [/usr/bin/gpg]:

When using secure HTTPS protocol all communication with Amazon S3

servers is protected from 3rd party eavesdropping. This method is

slower than plain HTTP, and can only be proxied with Python 2.7 or newer

Use HTTPS protocol [Yes]:

On some networks all internet access must go through a HTTP proxy.

Try setting it here if you cannot connect to S3 directly

HTTP Proxy server name:

New settings:

Access Key: ####################

Secret Key: ########################################

Default Region: US

S3 Endpoint: objects-us-east-1.dream.io

DNS-style bucket+hostname:port template for accessing a bucket: %(bucket)s.objects-us-east-1.dream.io

Encryption password:

Path to GPG program: /usr/bin/gpg

Use HTTPS protocol: True

HTTP Proxy server name:

HTTP Proxy server port: 0

Test access with supplied credentials? [Y/n] y

Please wait, attempting to list all buckets...

Success. Your access key and secret key worked fine :-)

Now verifying that encryption works...

Not configured. Never mind.

Save settings? [y/N] y

Configuration saved to '/home/ubuntu/.s3cfg'

The answers in this case are for the DreamObjects service. Adjust for the particulars of the service you're using.

Listing available S3 buckets with s3cmd

The web dashboard for your S3 provider lists the available buckets. But:

$ s3cmd ls

2024-01-08 10:42 s3://bucket-1

2024-01-08 10:50 s3://bucket-david

2024-01-19 11:32 s3://bucket-robert

2024-01-07 21:08 s3://site1-backups

2024-01-19 19:37 s3://test-bucket-dsh-1

2024-01-08 08:26 s3://site2-backups

Creating and deleting S3 buckets with s3cmd

To create a bucket:

$ s3cmd mb s3://my-new-bucket-name

Bucket 's3://my-new-bucket-name/' created

$ s3cmd mb s3://backups

ERROR: Bucket 'backups' already exists

ERROR: S3 error: 409 (BucketAlreadyExists)

The subcommand name mb stands for make bucket. If the bucket name is not unique, you'll see an error like this. Uniqueness of bucket names is not limited to your own buckets. Instead the bucket name must be unique across the entire S3 service you're using. When I tried to create a backups bucket, someone else already had a bucket by that name.

To delete a bucket:

$ s3cmd rb s3://my-new-bucket-name

Bucket 's3://my-new-bucket-name/' removed

$ s3cmd rb s3://backups

ERROR: Access to bucket 'backups' was denied

ERROR: S3 error: 403 (AccessDenied)

$ s3cmd rb s3://doez-nottt-exizt

ERROR: S3 error: 404 (NoSuchBucket)

The name rb is short for remove bucket. This also demonstrates some error conditions. For example the bucket named backups is owned by someone else. Since I do not have the access keys, I cannot delete the bucket.

Manipulating objects in an S3 bucket with s3cmd

Storing files in an S3 bucket:

$ s3cmd put file.txt s3://test-bucket-dsh-1/prefix/to/file.txt

$ s3cmd put --recursive . s3://test-bucket-dsh-1/service/

The first copies an individual file, storing it with the key prefix/to/file.txt.

The second copies (uploads) a directory tree, storing each with the prefix service/.

To view all the objects whose key begins with a given prefix:

$ s3cmd ls --recursive s3://test-bucket-dsh-1

2024-01-19 20:42 1350 s3://test-bucket-dsh-1/service/.github/workflows/github-ci.yml

2024-01-19 20:42 832 s3://test-bucket-dsh-1/service/.gitignore

2024-01-19 20:42 246 s3://test-bucket-dsh-1/service/Dockerfile

2024-01-19 20:42 707 s3://test-bucket-dsh-1/service/README.md

2024-01-19 20:42 0 s3://test-bucket-dsh-1/service/__init__.py

2024-01-19 20:42 101 s3://test-bucket-dsh-1/service/config.py

2024-01-19 20:42 0 s3://test-bucket-dsh-1/service/notification/__init__.py

2024-01-19 20:42 341 s3://test-bucket-dsh-1/service/notification/notification.py

2024-01-19 20:42 735 s3://test-bucket-dsh-1/service/notification/test_notification_service.py

2024-01-19 20:42 703 s3://test-bucket-dsh-1/service/notification/test_notification_storage.py

2024-01-19 20:42 14 s3://test-bucket-dsh-1/service/requirements.txt

2024-01-19 20:42 1843 s3://test-bucket-dsh-1/service/run.py

2024-01-19 20:42 0 s3://test-bucket-dsh-1/service/utils/__init__.py

2024-01-19 20:42 380 s3://test-bucket-dsh-1/service/utils/response_creator.py

This is intentionally similar to files in a file-system. The --recursive option on a regular file system traverses down a list of directories. With S3 there are no directories to traverse. Instead, it is finding all objects whose keys begin with the named prefix.

$ s3cmd get s3://test-bucket-dsh-1/service/README.md r.md

download: 's3://test-bucket-dsh-1/service/README.md' -> 'r.md' [1 of 1]

707 of 707 100% in 0s 797.02 B/s done

$ s3cmd get --recursive s3://test-bucket-dsh-1/service/

The get subcommand retrieves a file. Adding the --recursive option retrieves every object matching the named prefix.

$ s3cmd del --recursive s3://test-bucket-dsh-1/service/

The del subcommand deletes the named objects.

There are several more subcommands, and a lot more options. But, these are the essential commands and is more than enough to get started.

Mounting S3 buckets natively on Linux using s3fs

As cool as S3cmd is, the S3 bucket is in a separate bubble universe from the host filesystem. It means you cannot use regular command-line utilities to deal with objects stored on S3. Instead, you're interacting with those objects through the good graces of S3cmd.

S3fs is a FUSE-based filesystem provider that allows you to mount S3 buckets as if it's a regular filesystem. You then use normal Linux system APIs and normal commands to deal with objects stored in an S3 bucket.

Another limitation of S3cmd is that you cannot use multiple S3 bucket providers. With S3fs, each file system mount can use different access/secret keys, and different provider URLs.

Additionally, the S3fs project includes a Docker container that can bring an S3-based file system into the Docker environment.

Installing the S3 FUSE-based filesystem driver, S3fs

The

S3fs GitHub repository has instructions for installing packages on many Linux variants, as well as macOS, Windows and FreeBSD.

For Ubuntu/Debian:

$ sudo apt install s3fs

For macOS using MacPorts:

$ sudo port install s3fs

Keep an eye out for instructions at the end of the MacPorts build process. It wants you to link a file into /Library.

Setting up S3 access credentials for S3fs

Next, we need to set up access credentials. While the credentials can be passed on the command line, it is theoretically better to store them in a file. If nothing else, this avoids storing access credentials in the shell history.

S3fs can use credentials stored in an AWS Credentials file. But, it also has its own password file format.

echo ACCESS_KEY_ID:SECRET_ACCESS_KEY > ${HOME}/.passwd-s3fs

chmod 600 ${HOME}/.passwd-s3fs

This stores the access and secret keys in a file in the home directory, protecting it to be unreadable by anyone but the file owner. (knock on wood)

Mounting an S3 bucket as a Linux file system with S3fs

The basic usage to mount an S3 bucket as a file system is:

s3fs mybucket /path/to/mountpoint \

-o passwd_file=${HOME}/.passwd-s3fs

The bucket name, mybucket, does not have the s3:// prefix as for S3cmd. It's just the bucket name. The directory /path/to/mountpoint must already exist, and can be any directory in the filesytem.

$ s3fs test-bucket-dsh-1 ~/test-bucket \

-o passwd_file=${HOME}/.passwd-s3fs \

-o host=https://objects-us-east-1.dream.io

To mount from a non-AWS service, use either the host= or url=- option. As shown here, we are accessing the bucket named earlier from DreamObjects.

Mounting an S3 bucket as a macOS file system with S3fs

Because it's the same software, s3fs, running on macOS or Linux, the command-line is precisely the same. You set up the passwd-s3fs file in precisely the same way, and you run the commands precisely the same.

On Ubuntu, mounting the S3 bucket causes an entry to appear in the Nautilis left-hand sidebar. On macOS, no item shows up in the left-hand sidebar of Finder.

Running df -h we do see this output:

s3fs@macfuse0 8.0Ti 0Bi 8.0Ti 0% 0 4294967295 0% /Users/david/s3/shared

s3fs@macfuse1 8.0Ti 0Bi 8.0Ti 0% 0 4294967295 0% /Users/david/s3/david

Further, command-line tools can view the files in the S3 bucket. But, no item is listed in the Finder window.

Is it because the mountpoint is not in /Volumes? To test that question, change the mountpoint to /Volumes/NNNNNN-s3. To do this requires a change:

$ sudo mkdir /Volumes/NNNNN-s3

$ sudo s3fs test-bucket-dsh-1 /Volumes/NNNNN-s3 ... # as above

Making directories and mounting filesystems in /Volumes requires using sudo.

BUT -- PROBLEMS ENSUED -- See the next section

Accessing files in an S3 bucket using S3fs

Once mounted it shows up as a regular filesystem:

$ df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 96M 980K 95M 2% /run

/dev/sda1 4.7G 1.7G 3.1G 35% /

tmpfs 476M 0 476M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda15 105M 6.1M 99M 6% /boot/efi

tmpfs 96M 4.0K 96M 1% /run/user/1000

s3fs 16E 0 16E 0% /home/ubuntu/test-bucket

Note that this is inside a VPS managed by Multipass. The filesystem type is s3fs, and it is available under a regular filesystem path.

By the way, what unit of measurement is 16E?

$ ls -R ~/test-bucket/service/

...

When typing this command, try using shell file completion. It. Just. Works.

You're not using the ls subcommand provided by s3cmd. You're using the regular Linux ls command. Every other command works the same as for any other file system.

For example:

$ find ~/test-bucket/ -type f -name '*.py' -print

/home/ubuntu/test-bucket/service/__init__.py

/home/ubuntu/test-bucket/service/config.py

...

The s3cmd tool would have to create a find command. With S3fs, the regular find command is used. The same is true for every other command.

Unmounting an S3 filesystem using S3fs

The use of normal Linux commands extends to unmounting the S3 file system. Instead of having an s3fs command to unmount the S3 bucket, you just use the regular umount command:

$ umount ~/test-bucket

$ df -h

...

On Unix/Linux/macOS/etc the umount command is how you unmount a mounted filesystem. It. Just. Works. You can run a normal command like df -h to check that the filesystem is mounted, and unmounted, correctly.

Mounting S3 buckets using /etc/fstab

On Linux and many other Unix-like systems the file /etc/fstab describes the filesystems which are to be mounted. There are a lot of options for entries in this file. We'll focus on a simple scenario, mounting an S3 bucket, while being resilient to a failure to mount the bucket, on an Ubuntu VPS.

The following works only for Linux. On macOS, /etc/fstabcontains a message to not use that file. Instead, macOS uses the automounter with configuration settings living in /etc/auto_master.

This requires installing the S3FS FUSE driver discussed earlier.

We need to setup the passwd-s3fs file as discussed earlier.

We need to know the bucket name, and the filesystem location where you want it to appear.

When ready with this information, append something like this line to the end of /etc/fstab

test-bucket-dsh-1 /buckets/test-bucket fuse.s3fs _netdev,allow_other,nofail,use_path_request_style,url=https://objects-us-east-1.dream.io,passwd_file=/home/ubuntu/.passwd-s3fs 0 0

The first item is the name for the bucket. As with the earlier examples, test-bucket-dsh-1 is the name for a test bucket.

The second item is the filesystem location. It seemed that /buckets might be a good location to mount S3 buckets. Change this to suit your preferences.

The third item is the filesystem time, in this case fuse.s3fs.

The fourth item is a long list of options.

The nofail option ensures that if the S3 bucket fails to mount that the system can continue booting as normal.

The url= option is required for specifying a non-AWS S3 server, as discussed earlier.

The file= option lists the pathname of the passwd-s3fs file.

Once you've finished this, run the command:

$ sudo mount -a

If all is well no messages are printed, and running df -h shows the filesystem has been mounted.

While the filesystem is mounted, normal commands can be used for access.

To unmount the filesystem:

$ sudo umount /buckets/test-bucket

The usage of mount and umount is extremely normal.

This fstab entry attempts to mount the S3 bucket when the system boots, and leaves it mounted the entire time the system is running. You may prefer to mount the bucket intermittently. If so, add noauto to the options list, then run mount and umount as desired. The mount command changes a little to sudo mount /buckets/test-bucket, however.

Mounting S3 buckets on Linux using rclone

Rclone is a very cool tool whose primary purpose is data synchronization. The project website starts by saying:

Rclone is a command-line program to manage files on cloud storage. It is a feature-rich alternative to cloud vendors' web storage interfaces. Over 70 cloud storage products support rclone including S3 object stores, business & consumer file storage services, as well as standard transfer protocols.

Rclone has powerful cloud equivalents to the unix commands rsync, cp, mv, mount, ls, ncdu, tree, rm, and cat. Rclone's familiar syntax includes shell pipeline support, and --dry-run protection. It is used at the command line, in scripts or via its API.

The documentation does go over all those features. I have not personally tried any of this, but only used its ability to mount remote filesystems on Linux and macOS.

There is a command-line version of Rclone. I have only used the Rclone GUI.

On Ubuntu you can find this:

$ apt-cache search rclone

rclone - rsync for commercial cloud storage

rclone-browser - Simple cross platform GUI for rclone

Installing the rclone-browser package provides the GUI, and also installs the rclone CLI.

Information about Rclone Browser is on its website,

https://kapitainsky.github.io/RcloneBrowser/, which also includes links to downloads for Linux, macOS and Windows. I have only tested Ubuntu and macOS.

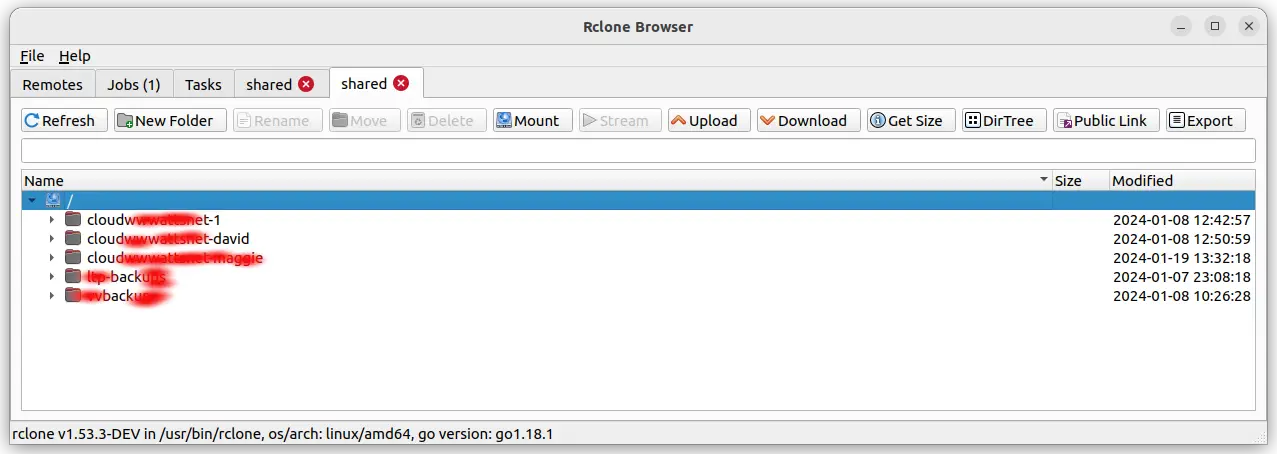

On my Ubuntu laptop, Rclone Browser looks like this:

This shows the remote file-systems I've configured on this system. Rclone supports a very long list of systems, including S3 services.

To configure a new remote system connection, start by pressing the Configure button.

Up pops a terminal window in which you must interact with a semi-arcane textual interaction to configure a new connection. This interaction is actually the

rclone config command at work. While the interaction is nonintuitive, it doesn't suck.

Once you've defined a connection it shows up on this page.

Double-click on an S3 connection and you'll be taken to a screen like this:

Each row shown here is a bucket.

To mount a bucket on your system, click on the bucket name then click the Mount button. That will bring up a file/directory selector dialog. You navigate that to your chosen mount directory, then click the Select button. After a short time the S3 bucket will be mounted.

On Ubuntu, the mount appears in the Nautilis file manager, and as a regular filesystem mount when running df -h.

As discussed earlier with the s3fs approach, all command-line utilities and other normal software see's the S3 bucket as a regular filesystem. Everything just works as expected.

An issue is that, with DreamObjects over the Internet connection available to me (using a 4G-based WiFi Hotspot), performance is very slow. With RClone, performance is much better than with S3fs.

When you're done with the file-system unmount it as normal with sudo umount /path/to/mount/point.

Mounting S3 buckets on macOS using rclone

Everything written for the Linux version of RClone Browser applies for the macOS version.

Mounting S3 buckets in Docker containers, and to the host system

An alternate is the efrecon/s3fs Docker container (

GitHub). This container has S3fs installed, allowing S3 buckets to be available in this or other Docker containers, and to the host system.

This is adapted from the README:

docker run -it --rm \

--device /dev/fuse \

--cap-add SYS_ADMIN \

--security-opt "apparmor=unconfined" \

--env "AWS_S3_BUCKET=test-bucket-dsh-1" \

--env "AWS_S3_ACCESS_KEY_ID=############" \

--env "AWS_S3_SECRET_ACCESS_KEY=##################" \

--env "AWS_S3_URL=https://objects-us-east-1.dream.io" \

--env UID=$(id -u) \

--env GID=$(id -g) \

-v /home/david/test-bucket:/opt/s3fs/bucket:rshared \

efrecon/s3fs:1.78 \

empty.sh

This is again mounting my test S3 bucket. There's some serious Docker wizardry going on here.

The --device option makes a host system device file available inside the container. In this case, it is the FUSE driver.

The --cap-add and --security-opt options inform Docker about other special requirements. The UID and GID variables pass in user IDs so that file ownership is mapped correctly.

The bucket name, access keys, and host name, are passed as environment variables. It's possible to store these as files, and pass the file name through other environment variables.

The -v option makes the S3 bucket available inside the container as /opt/s3fs/bucket, and as /home/david/test-bucket in the host system.

If you prefer a Docker Compose file:

version: "3.3"

services:

s3fs:

stdin_open: true

tty: true

devices:

- /dev/fuse

cap_add:

- SYS_ADMIN

security_opt:

- apparmor=unconfined

environment:

- AWS_S3_BUCKET=test-bucket-dsh-1

- AWS_S3_ACCESS_KEY_ID=########

- AWS_S3_SECRET_ACCESS_KEY=###################

- AWS_S3_URL=https://objects-us-east-1.dream.io

- UID=1000

- GID=1000

volumes:

- /home/david/test-bucket:/opt/s3fs/bucket:rshared

image: efrecon/s3fs:1.78

command: empty.sh

The command empty.sh keeps the container running, otherwise it would exit immediately. As long as the container is active, the file system is actively mounted from your S3 service.

But, what can you do with this? It's a method to mount S3 file systems without installing s3fs on your computer. Beyond that?

The README suggests that the S3 volume can be shared with other Docker containers.

This attempt was made:

version: "3.3"

services:

s3fs:

stdin_open: true

tty: true

devices:

- /dev/fuse

cap_add:

- SYS_ADMIN

security_opt:

- apparmor=unconfined

environment:

- AWS_S3_BUCKET=nginx-dir-dsh-1

- AWS_S3_ACCESS_KEY_ID==########

- AWS_S3_SECRET_ACCESS_KEY==###################

- AWS_S3_URL=https://objects-us-east-1.dream.io

- UID=1000

- GID=1000

volumes:

- /home/david/test-bucket:/opt/s3fs/bucket:rshared

image: efrecon/s3fs:1.78

command: empty.sh

nginx:

volumes:

- /home/david/test-bucket:/usr/share/nginx/html:rw

image: nginx

ports:

- 1080:80

networks: {}

In this case the second container is NGINX. Its volumes directive is the normal method for hosting web content from the host computer via NGINX. If /usr/share/nginx/html is empty, the NGINX container autopopulates it with a simple website.

There are two recommended method for sharing volumes between Docker containers. The first is the volumes_from directive, which did not work. The second is to use the same volume source from two containers.

In this case, the host directory /home/david/test-bucket is common to both. The intent is for the s3fs container to mount that directory from S3, and for the nginx container to then use that directory and autopopulate it with content.

Unfortunately, opening http://localhost:1080 in the browser gives an NGINX error 403 Forbidden. Further, in the logging output is this message:

docker-test-bucket-nginx-1 | 2024/01/20 12:50:08 [error] 22#22: *1 directory index of "/usr/share/nginx/html/" is forbidden, client: 192.168.128.1, server: localhost, request: "GET / HTTP/1.1", host: "localhost:1080"

Exploring this leads down a rabbit hole of file system permissions issues.

The above Compose file is close to what's required to share an S3 volume between containers. In this case, permissions problems get in the way.

Summary

We can easily use S3 buckets from the command line of Linux, macOS, and Windows systems.

Of the methods explored, Rclone Browser is the most convenient. It gives you a point-and-click interface that results in a normal file-system mount. Further, it can be used with a huge list of remote systems. Finally, the performance and robustness is much better than S3fs.

S3FS is a good alternative. Once the S3 bucket is mounted its objects act mostly like normal files, and we can use any command or tool we like. It's also possible to add S3fs mounts into /etc/fstab.

However, Rclone is a far more complete solution. The command-line tool contains a long list of commands for copying or synchronizing files across cloud storage systems.

Since we just spent two paragraphs praising Rclone, one paragraph praising S3FS, and zero paragraphs on s3cmd, that should answer any question about which tool to use. Rclone does everything that either s3cmd or S3FS can do, with more reliability and performance than either.