; Date: Fri Jun 29 2018

Tags: Big Brother »»»» Face Recognition »»»» Machine Learning »»»» Mass Violence »»»»

The immediate reaction on Thursday to the Capital Gazette newspaper shooting was that the climate of violence encouraged by Pres. Trump, and all the invective aimed at newspapers by Trump, had resulted in some lunatic acting out the grievances inflamed by Trump's rhetoric. In other words, some Patriot believing the Capital Gazette to be a hub of Liberal nonsense could have decided to shoot the place up. Supposedly the shooter, to mess up law enforcement efforts, had damaged his fingerprints and did not carry identification, making us think maybe this was some kind of terror attack. At the end of the day, "Facial Recognition Technology" was used to identify the shooter as a person with a long-standing personal grievance against the staff of that newspaper, because of reporting by that newspaper about that person. In other words, there is no nefarious dark scheme here. While it is true that Pres. Trump, and others in his administration, are inflaming the public against journalists with eerie similarity to the Nazi Germany playbook, this particular case is a straight-up personal grievance. And we have proof that, while we should be concerned about the Big Brother implications of facial recognition technology, in some cases like this one the technology is highly useful.

Over the last few days I've written about potential misuses of facial recognition technology. Employees at Amazon and Google are concerned that their work is being put into the hands of federal agencies to use in spying activities. Both companies have seen internal protests by employees over their collaboration with federal agencies.

At Amazon, the concern is that video analysis software, the AWS Rekognition service, is being used by immigration enforcement folks at the USA-Mexico border and is involved with the separation of children from parents. See Amazon employees demand stopping face-recognition contract with federal government

At Google, the concern is that video analysis software in Google's Cloud Computing platform is being used by the Department of Defense, under Project Maven, to analyze drone footage in war zones. Google's employees do not see Google's business as being a War business. See Google employees demand AI rules to preclude use as weapons

According to an NY Times report about the Capital Gazette shooting, the shooter "refused to cooperate with the authorities or provide his name," and he was identified using facial recognition technology.

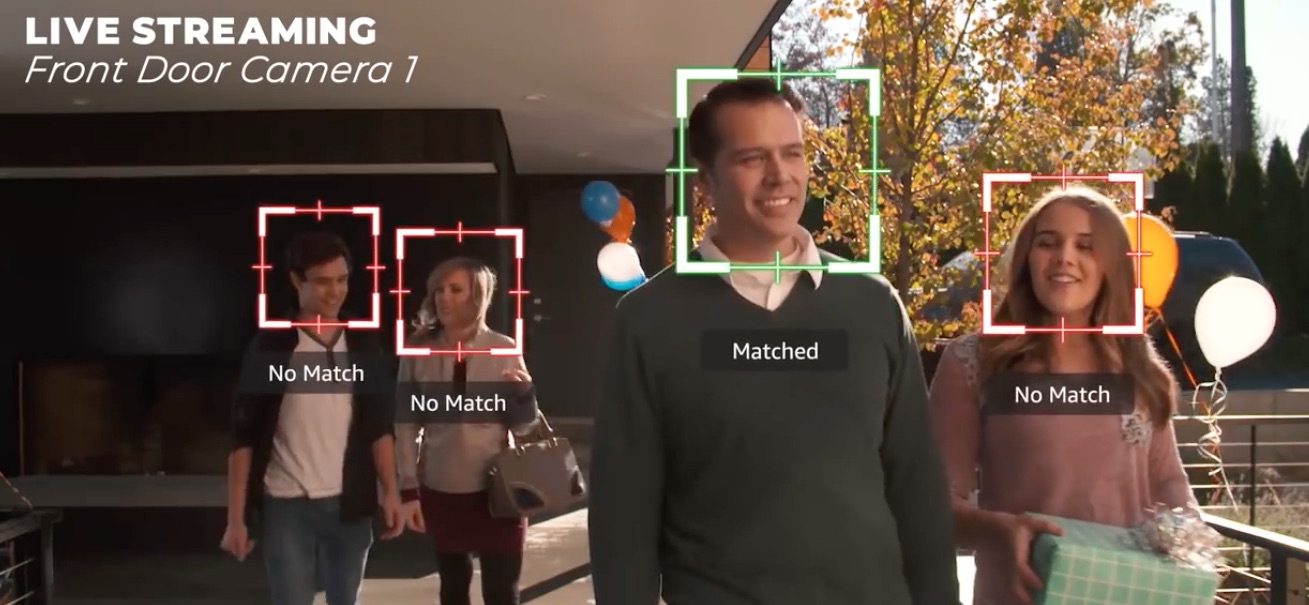

What both Amazon and Google employees are concerned over is facial recognition technology. Open source software for this exists, so "anybody" could install it on their own cloud service and get on with running facial recognition tasks. However, Amazon, Google, and Microsoft as well, and perhaps other cloud service providers, have prepackaged services where anyone with a credit card can set up facial recognition software and get on with the business of scanning photos or videos.

One type of use is fairly innocent - social media networks can use facial recognition to automatically tag people who are visible in a picture. Suppose you post a picture of a family gathering. The social media network (e.g. Facebook) wants to figure out who's in the picture, and let THEM know their picture was just posted. What the social network does is scan the picture to find faces, then use facial recognition technology to scan its database of pictures and determine whose faces those are.
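To make the "scan its database" step a little more concrete, here is a minimal sketch in Python. It assumes each face has already been turned into a list of numbers (an "embedding") by some face recognition model, and that two pictures of the same person produce similar lists. The names `gallery`, `identify`, and `threshold`, and all the numbers, are made up for illustration. This is not how Facebook or any particular cloud service actually implements it.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold=0.9):
    """Return (name, score) for the closest known face.

    If even the closest face scores below the threshold, the name is None,
    meaning "nobody we know". The threshold value is an assumption.
    """
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Hypothetical database of known faces. Real embeddings have far more
# than three numbers; these values are invented for the example.
gallery = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}

face_in_new_photo = [0.88, 0.12, 0.31]  # close to alice's stored face
stranger = [0.5, 0.5, -0.7]             # not close to anyone known

print(identify(face_in_new_photo, gallery))
print(identify(stranger, gallery))
```

The threshold is the interesting design choice. Set it too high and friends don't get tagged; set it too low and strangers get tagged as friends.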

It's nice that Facebook helps your friends know you posted a picture. This serves the common goal of social media networks, to get more social interaction happening through the social media network.

But - step back if you will - what this means is technology exists such that anyone with a large body of images can now scan those images to identify objects or people in other pictures or videos.

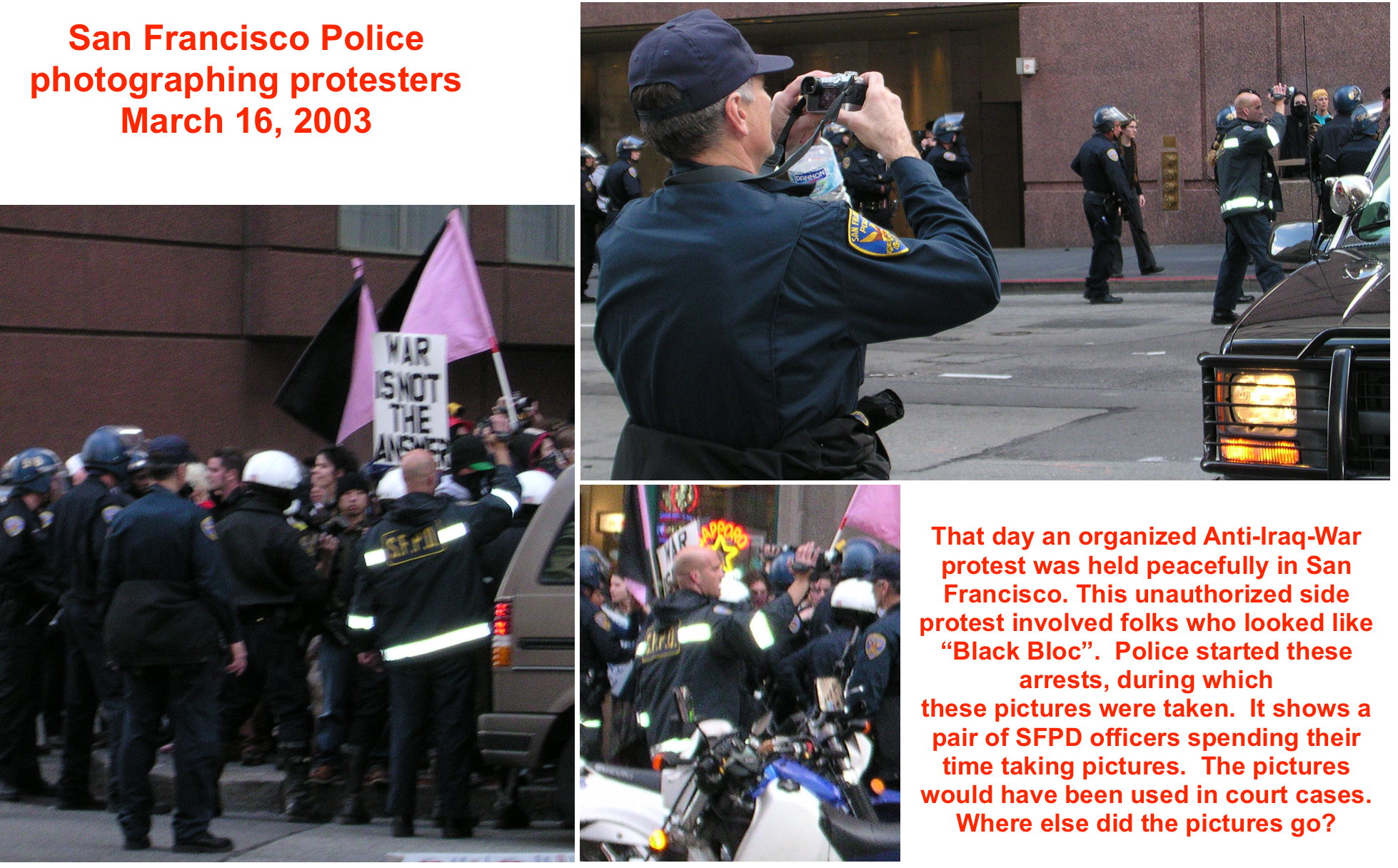

Law enforcement agencies in general have been collecting pictures for a long time. It's possible that USA law enforcement groups have worked together to build a shared database of images to scan.

The obvious primary purpose was to use the pictures and video (the bald guy is carrying a camcorder) as evidence in any trial. But ... those images could easily be copied into a database and reused in other instances.

Consider all the ways Governments collect our pictures. Driver's license photos, for example, as well as photos taken at Immigration and Customs Enforcement checkpoints. Every person entering the USA at an airport is photographed.

This means law enforcement agencies will have already collected a large body of images. With facial recognition software easily available from cloud service providers, those images can be used by law enforcement to quickly identify folks.

It's claimed this type of software offers real-time person and object identification.

Meaning - you could be pulled over for a traffic stop, and the "body camera" carried by the police officer could be sending pictures over WiFi through a relay station in the patrol car to a cloud service, that automatically identifies you via facial recognition technology. What if the software makes an incorrect identification, and suddenly the police are treating you as public enemy #1 when you're nothing of the sort?
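How likely is a wrong match? Here is a back-of-the-envelope sketch in Python. Both numbers are assumptions picked for illustration, not measurements of any real system: a database of one million faces, and a one-in-a-million chance that the software wrongly matches your face to any single stranger's picture. It also assumes each comparison is independent of the others.

```python
def expected_false_matches(gallery_size, false_match_rate):
    """Average number of wrong matches when one face is searched against the database."""
    return gallery_size * false_match_rate


def prob_at_least_one_false_match(gallery_size, false_match_rate):
    """Chance that a single search produces one or more wrong matches."""
    return 1.0 - (1.0 - false_match_rate) ** gallery_size


gallery_size = 1_000_000   # assumed number of faces in the database
false_match_rate = 1e-6    # assumed chance of a wrong match per comparison

expected = expected_false_matches(gallery_size, false_match_rate)
prob = prob_at_least_one_false_match(gallery_size, false_match_rate)

print(f"expected wrong matches per search: {expected:.2f}")
print(f"chance of at least one wrong match: {prob:.0%}")
```

Under those assumptions, a search of a person who is not in the database at all still comes back with a wrong match roughly 63% of the time. Even an error rate that sounds tiny adds up when every face is compared against millions of others.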

In the case named above - facial recognition technology has had a positive benefit. We can all breathe a sigh of relief that this event does not necessarily mean there will be a wave of violence against journalists. While the person identified as the shooter tried to evade recognition by police, the police quickly identified the person with facial recognition technology, leading quickly to an obvious motive. The person identified as the shooter had a long-simmering grievance against that newspaper because of their reporting of his actions.

What makes this good is the context we're living in currently. President Trump and his Administration have been attacking journalists and the media for several years now. The pattern is extremely similar to how the Nazi Party in Germany in the 1920s and 1930s also attacked journalists and the media, attacking their credibility in order to perpetrate lies justifying their subsequent actions. Many are worried the escalating rhetoric against journalists will turn into violence against journalists.

Therefore, having a positive identification of the shooter, along with his motive, may defuse tensions around this event.

At the same time there is this issue about facial recognition technology, and whether it will be deployed in a helpful or harmful way.