; Date: Tue Sep 04 2018

Tags: Big Data, Big Science, Large Hadron Collider

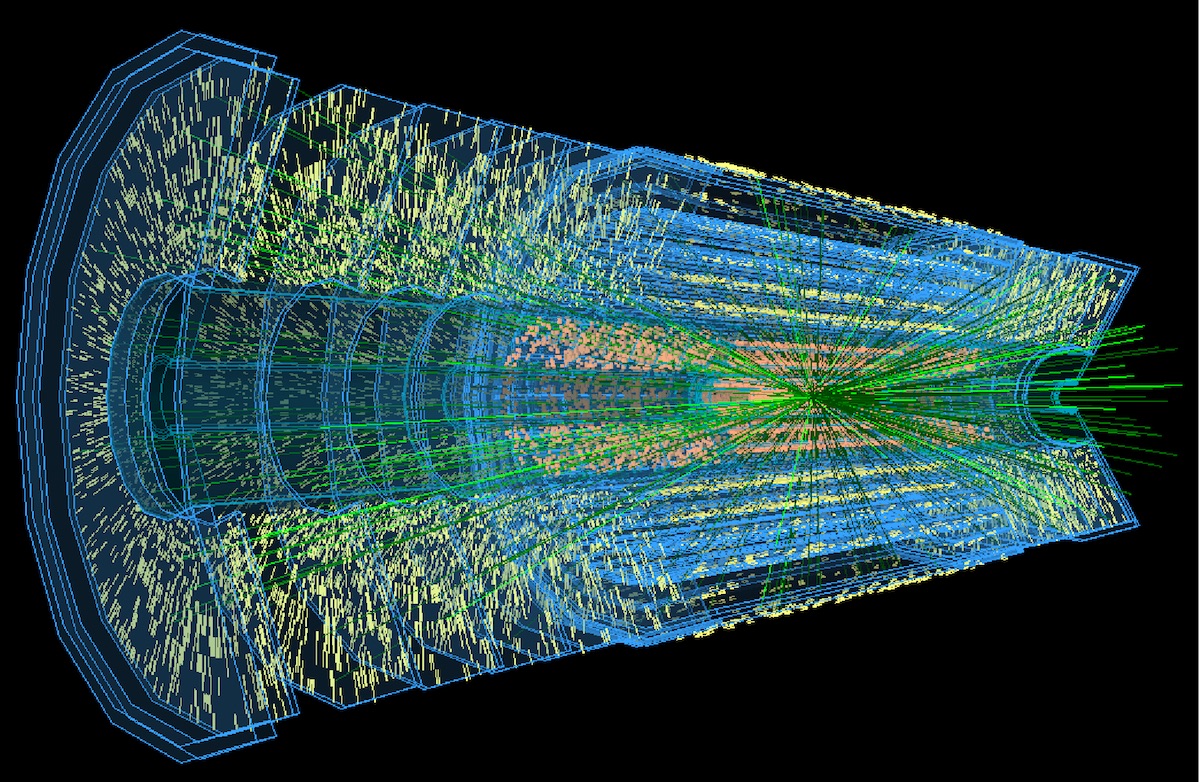

The upgraded Large Hadron Collider is expected to produce 1 billion particle collisions every second, of which only a small fraction are collisions that physicists want to study. Weeding out the collisions of interest will require a big data software system on a massive scale, one that can essentially find the needle in the haystack: the few interesting collisions in a flood of humdrum, everyday ones. When the LHC is fully operational in 2026, scientists will be completely buried in data unless they develop better tools. To that end, the NSF is funding a new institute dedicated to building them.

Bogdan Mihaila describes how the Large Hadron Collider is helping reveal new insights into the universe, and the need for software to handle the extreme challenges presented by its upgrade in 2026. Mihaila is the program officer overseeing the $25 million NSF award to create the Institute for Research and Innovation in Software for High-Energy Physics (IRIS-HEP) that will develop those software solutions. Credit: NSF

Peter Elmer of Princeton University explains how the Large Hadron Collider (LHC) is a Higgs boson factory, and why hunting through its data is like looking for a needle in a haystack. Elmer is the principal investigator on the $25 million NSF award to create the Institute for Research and Innovation in Software for High-Energy Physics (IRIS-HEP), which will develop software solutions for the data challenges presented by future upgrades to the LHC. Credit: NSF

News Release 18-071

New institute to address massive data demands from upgraded Large Hadron Collider

Research will develop software for the biggest Big Data challenges

September 4, 2018

The National Science Foundation (NSF) has launched the Institute for Research and Innovation in Software for High-Energy Physics (IRIS-HEP), a $25 million effort to tackle the unprecedented torrent of data that will come from the High-Luminosity Large Hadron Collider (HL-LHC), the world's most powerful particle accelerator. The upgraded LHC will help scientists fully understand particles such as the Higgs boson, first observed in 2012, and their place in the universe.

When the HL-LHC reaches full capability in 2026, it will produce more than 1 billion particle collisions every second, from which only a few will reveal new science. A tenfold increase in luminosity will drive the need for a tenfold increase in data processing and storage, including tools to capture, weed out and record the most relevant events and enable scientists to efficiently analyze the results.

"Even now, physicists just can't store everything that the LHC produces," said Bogdan Mihaila, the NSF program officer overseeing the IRIS-HEP award. "Sophisticated processing helps us decide what information to keep and analyze, but even those tools won't be able to process all of the data we will see in 2026. We have to get smarter and step up our game. That is what the new software institute is about."

In 2016, NSF convened a Scientific Software Innovation Institute conceptualization project (S2I2-HEP) to gauge the LHC data challenge.

Through numerous workshops and two community position papers, the S2I2-HEP project brought together representatives from the high-energy physics and computer science communities. These representatives reviewed two decades of successful LHC data-processing approaches and discussed ways to address the opportunities that lay ahead. The new software institute emerged from that effort.

"High-energy physics had a rush of discoveries and advancements in the 1960s and 1970s that led to the Standard Model of particle physics, and the Higgs boson was the last missing piece of that puzzle," said Peter Elmer of Princeton University, the NSF principal investigator for IRIS-HEP. "We are now searching for the next layer of physics beyond the Standard Model. The software institute will be key to getting us there. Primarily about people, rather than computing hardware, it will be an intellectual hub for community-wide software research and development, bringing researchers together to develop the powerful new software tools, algorithms and system designs that will allow us to explore high-luminosity LHC data and make discoveries."

Bringing together multidisciplinary teams of researchers and educators from 17 universities, IRIS-HEP will receive $5 million per year for five years, with a focus on both developing innovative software and training the next generation of users.

"It's a crucial moment in physics," added Mihaila. "We know the Standard Model is incomplete. At the same time, there is a software grand challenge to analyze large sets of data, so we can throw away results we know and keep only what has the potential to provide new answers and new physics."

Co-funded by NSF's Office of Advanced Cyberinfrastructure in the Directorate for Computer and Information Science and Engineering (CISE) and the NSF Division of Physics in the Directorate for Mathematical and Physical Sciences (MPS), IRIS-HEP is the latest NSF contribution to the 40-nation LHC effort. It is the third OAC software institute, following the Molecular Sciences Software Institute and the Science Gateways Community Institute.