; Date: Sat Feb 23 2019

Tags: Git »»»»

ARM-based CPUs are going into more than just mobile devices. ARM is popular in cell phones and the like because it draws little power. That low power draw, along with the reduced heat dissipation it brings, is proving attractive in server computers as the world moves towards cloud computing. Linus Torvalds recently suggested that software developers need ARM-based PCs to go along with ARM-based servers.

The argument is derived from the experience with x86 servers.

Linus Torvalds noted that a software developer working on a certain CPU type will prefer to deploy their code to a similar CPU. In other words, a developer on an x86 laptop will want to deploy to an x86 server.

Hence, if the chosen deployment target is an ARM-based server, then the developer had better have good access to an ARM-based PC in their office.

There's a certain logic to this, and the first attached video makes a good case. BUT...

To someone like Linus Torvalds, whose primary coding experience is in C or C++, that argument makes a lot of sense. Such coders, and it's not just C/C++ coders, work close to the operating system and may even use CPU-specific coding techniques to wring out more performance.

A typical web app developer is highly insulated from the hardware. They're coding in Java, Python, Node.js (JavaScript), PHP, Ruby, or Go. All of those are higher-level languages, and the programmer is promised compatibility across operating systems and hardware.

Even more, containerization with Docker or Kubernetes is popular nowadays. With containerization, the developer is not using the macOS version of Java (or whatever), then crossing their fingers and hoping it will be similar enough. Instead, the developer is using the same operating system inside the Docker image on their laptop as will be deployed on the server.

Does that mean a software developer deploying Docker images to ARM can develop those Docker images on their x86 laptop?

That's a bit of a leap of faith again. Let me explain.

Before production deployment one needs a test deployment, and before the test deployment one needs the developer deployment on their laptop. When the developer is happy with their code, they promote it to the next level, and eventually it bubbles up to production deployment.

As soon as a stack difference shows up along the line, a risk is introduced. For example, the developer could develop on an x86 laptop, and then the build/CI system builds a corresponding ARM container.
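To make the idea of a stack difference "along the line" concrete, here is a minimal sketch in Python. The `Stage` class, the `first_arch_change` helper, and the stage names and architecture labels are all hypothetical, made up for illustration. It only walks a list of pipeline stages and reports the first point where the CPU architecture changes, which is where the risk discussed above is introduced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step in the promotion pipeline (hypothetical model)."""
    name: str
    arch: str  # illustrative labels such as "amd64" or "arm64"


def first_arch_change(stages):
    """Return (previous, current) for the first adjacent pair of stages
    whose architectures differ, or None if all stages match."""
    for prev, cur in zip(stages, stages[1:]):
        if prev.arch != cur.arch:
            return prev, cur
    return None


# Assumed pipeline: x86 laptop, then ARM everywhere else.
pipeline = [
    Stage("developer laptop", "amd64"),
    Stage("build/CI", "arm64"),
    Stage("test", "arm64"),
    Stage("production", "arm64"),
]

change = first_arch_change(pipeline)
if change is not None:
    prev, cur = change
    print(f"architecture changes from {prev.arch} ({prev.name}) "
          f"to {cur.arch} ({cur.name})")
```

In this assumed pipeline the change happens between the laptop and the build/CI system, so everything the developer ran locally was exercised on a different architecture than everything that follows.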

The claim would be that the ARM image is similar enough to the x86 image because the high level language (Java or whatever) promises compatibility across platforms.

But there will obviously be implementation and behavior differences between Node.js for x86 and Node.js for ARM (or whatever). What if the code triggers one of those differences, and it is missed because the developer is developing on x86 rather than ARM?
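One cheap safeguard is to have the application or its test suite record which architecture it actually ran on, so that a mismatch with the deployment target is at least visible. The following is a rough sketch in Python rather than Node.js, using only the standard library. The function names `normalize_arch`, `runtime_fingerprint`, and `check_target` are hypothetical, and the alias table is an assumption covering only the common names for 64-bit x86 and ARM.

```python
import platform
import struct
import sys

# Assumed alias table: different operating systems report the same
# CPU family under different names.
_ALIASES = {
    "x86_64": "amd64", "amd64": "amd64",
    "aarch64": "arm64", "arm64": "arm64",
}


def normalize_arch(machine):
    """Map a machine string to a common label; unknown names pass through."""
    name = machine.lower()
    return _ALIASES.get(name, name)


def runtime_fingerprint():
    """Collect a few facts about the platform this process is running on."""
    return {
        "arch": normalize_arch(platform.machine()),
        "pointer_bits": struct.calcsize("P") * 8,  # size of a C pointer
        "byte_order": sys.byteorder,               # "little" or "big"
        "python": platform.python_version(),
    }


def check_target(expected_arch):
    """True if this process runs on the architecture we plan to deploy to."""
    return runtime_fingerprint()["arch"] == normalize_arch(expected_arch)


print(runtime_fingerprint())
```

A test run could log this fingerprint next to its results, and a call such as `check_target("arm64")` could warn when the tests ran on something other than the deployment architecture. It does not find behavior differences by itself; it only tells you which platform the passing tests actually covered.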

If the Quality Engineering department is worth the salary you're paying them, they'll raise a red flag.

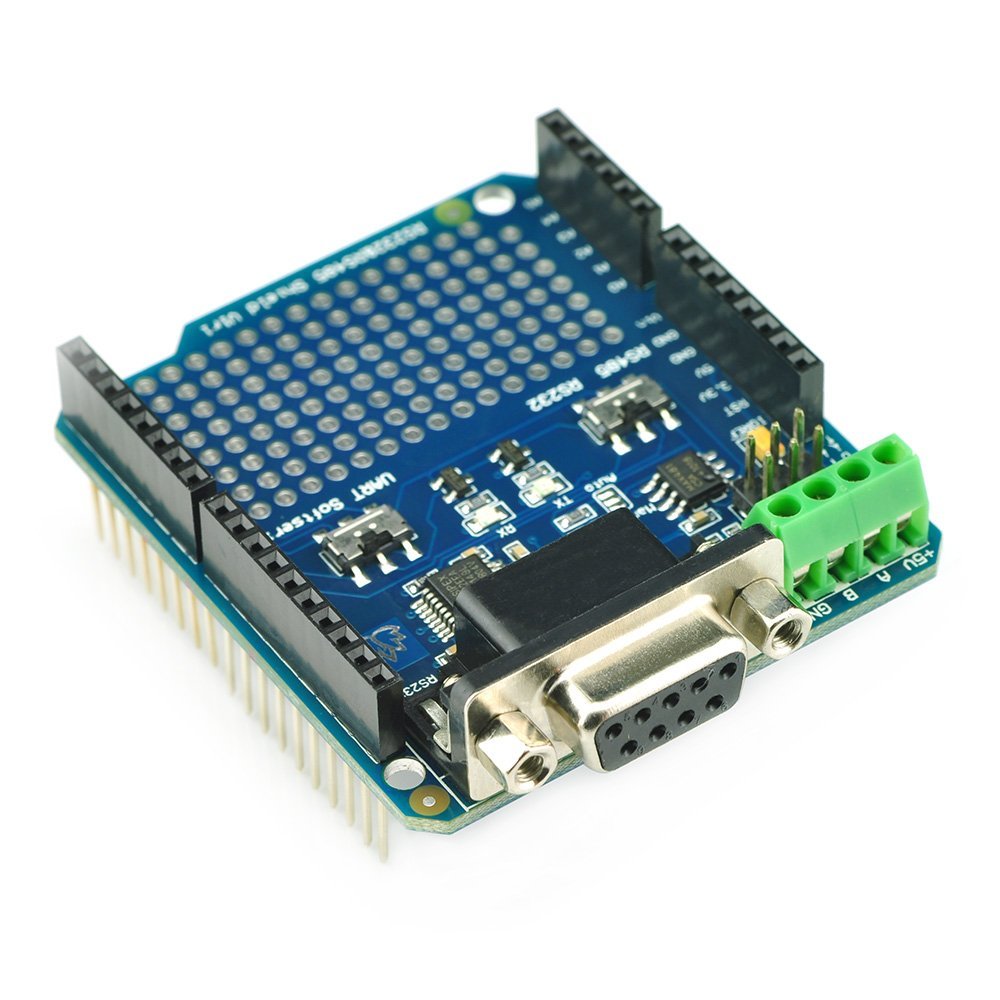

Obviously, app developers for ARM-based mobile devices already use both device emulators on their laptops and real devices in their office for development and testing. But in this article we're talking about a different model, where traditional web app developers are now deploying to ARM-based systems of a different breed. These ARM-based systems live in server rooms on 19" racks, just like today's x86-based servers.

Developers deploying to x86 servers can run VirtualBox (or a similar tool) and/or Docker on their laptop to be assured of developing and testing in the same environment as is used in deployment.

What is needed by the developer who deploys to ARM-based server systems?