; Date: Sat Jan 15 2022

Tags: Blogging

Automation is coming to a task we thought was unautomatable - writing. The AI researchers at OpenAI have trained an "AI model" on zillions of text documents, with which new written works can be easily generated. The demo shows news articles being automatically generated from sports commentary transcripts. What writing task will they go after tomorrow?

In a video excerpted from the Microsoft Ignite conference in November 2021, Microsoft demo'd how their new hosted OpenAI GPT-3 service can generate text for use in news articles. In the demo, written summaries were automatically generated from the transcript of play-by-play commentators at an NBA basketball game. Summaries were generated for each section of the game, and from those, summaries were then generated for each quarter. They then breathlessly said that content editors would now have more options for producing content from events like basketball games.
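The two-level process in that demo (summarize each section, then summarize the summaries for each quarter) can be sketched in a few lines of Python. This is only an illustration of the structure, not Microsoft's or OpenAI's code. The names `naive_summarize` and `summarize_game` are made up for this sketch, and `naive_summarize` is a trivial placeholder standing in for a call to a real language model.

```python
from typing import Callable, Dict, List


def naive_summarize(text: str, max_words: int = 12) -> str:
    """Hypothetical stand-in for a language-model call: keep the first few words."""
    words = text.split()
    return " ".join(words[:max_words])


def summarize_game(
    quarters: Dict[str, List[str]],
    summarize: Callable[[str], str] = naive_summarize,
) -> Dict[str, str]:
    """Summarize each transcript section, then summarize those summaries per quarter."""
    result: Dict[str, str] = {}
    for quarter, sections in quarters.items():
        # First pass: one summary per section of commentary.
        section_summaries = [summarize(section) for section in sections]
        # Second pass: a single summary built from the section summaries.
        result[quarter] = summarize(" ".join(section_summaries))
    return result
```

Passing the summarizer in as a parameter means the placeholder could be swapped for a real model call without changing the two-pass structure.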

Microsoft, quit avoiding the story. The effect of this is that the journalists who currently write game summaries will be out of a job once this is deployed to newsrooms.

Artificial intelligence synthetically generating articles and other text?

But, will it stop there? It turns out GPT-3 has a lot more capability beyond summarizing the play-by-play of basketball games. It is capable of a wide range of text processing tasks. What about the possibility of using GPT-3, or tools like it, to synthesize text like blog posts and news articles?

The examples shown on the OpenAI website (the makers of the GPT-3 tool) include several examples of synthesizing text in limited forms, but not for larger pieces of text. An example most Gmail users are familiar with is completing sentences as we type, as Gmail began doing a year or so ago. A tool that could dynamically help writers generate text would be useful, and would speed up the writing process. Another GPT-3 example is generating a recipe from a list of ingredients.

What if there are tools for synthetically generating things like news articles and blog posts? There are a lot of news articles screaming out for automatic generation. For example, some useful business news reporting can be automatically derived from the standardized documents businesses are required to file with the SEC. Or articles about the weather can be automatically generated from US Weather Service data.
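Generating text from structured data does not even require a large AI model. As a minimal sketch, here is a template-based weather blurb in Python. The `Forecast` fields and the rain thresholds are invented for illustration and do not reflect any real US Weather Service data format.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    """Hypothetical forecast record; field names are assumptions for this sketch."""
    city: str
    high_f: int
    low_f: int
    chance_of_rain: float  # 0.0 to 1.0


def weather_blurb(f: Forecast) -> str:
    """Turn one structured forecast into a one-sentence report."""
    # Pick the opening phrase from the rain probability (thresholds are arbitrary).
    if f.chance_of_rain >= 0.6:
        sky = "Rain is likely"
    elif f.chance_of_rain >= 0.3:
        sky = "Showers are possible"
    else:
        sky = "Dry conditions are expected"
    return (
        f"{sky} in {f.city}, with a high of {f.high_f}F and a low of {f.low_f}F "
        f"({round(f.chance_of_rain * 100)}% chance of rain)."
    )
```

A language model would add variety and fluency on top of this, but the basic idea is the same: the facts come from the data, and the software supplies the sentences.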

There are journalists currently writing those articles. If those articles were instead automatically generated, would those journalists be out of a job, or would they be reassigned to other beats?

Automatically generated blog posts could be a spammer's paradise

For the entire existence of the World Wide Web, the Web has been plagued with websites whose owners seek to cheat their way to the top of search results. One trick is to "spin" the words of an article, meaning to automatically rewrite an article such that it contains similar information but in a different presentation that the search engines won't detect as duplicate content. In general such people want to automatically generate lots of postings targeting their preferred keywords.
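To see why spinning can slip past naive duplicate detection, consider one simple way to compare two texts: break each into overlapping runs of words ("shingles") and measure how many runs they share. The sketch below is an illustration only. It is not how any particular search engine works, and the function names are made up for this example.

```python
import re
from typing import Set, Tuple


def shingles(text: str, size: int = 3) -> Set[Tuple[str, ...]]:
    """Return the set of overlapping word runs of the given size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Share of shingles two texts have in common (1.0 means identical sets)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two texts too short to shingle are treated as identical
    return len(sa & sb) / len(sa | sb)
```

Swapping even a couple of words in a sentence breaks every shingle that touches them, so a spun article can score as mostly "different" under this kind of check while saying the same thing.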

For example, there are close to a zillion sites presenting "conservative news" that is supposedly unfiltered truth, but is instead blatantly fake, made-up stories. Some of these are operated by the Troll Farms of Russia to spread outright propaganda (some history of the Russian Troll Farms), but others are homegrown American operations spreading the Conservative version of truth. I sometimes look at these websites, and one thing which stands out is how nearly identical stories will appear on dozens of sites all at the same time, using nearly identical words and nearly identical titles, and often linking to each other. I haven't studied it closely, but it appears to be an attempt to game the search engines to raise the visibility of their content.

In another instance, I found a job listing calling for writers to create news articles for a Conservative news blog. The writers were to be generating several articles per day, with talking points for the articles to be supplied to the writers.

In these and many similar scenarios, certain types of website authors want to generate lots of articles on chosen topics. Their goal is juicing search engine results towards their websites. They don't care about flooding the Internet with low quality content.

Current examples of synthetically generating news articles

This isn't a far-off, hypothetical issue. There are a couple of examples available today.

Go to your favorite search engine (mine is DuckDuckGo) and type in "AI article generator" as your search phrase. When I did that, dozens of different tools showed up. For example, one of them offered to dynamically generate "About Us" descriptions in several topic areas, such as this one for a "Sustainable Energy Fund":

Sustainable Energy Fund was established to achieve the sustainable development goals of the Sustainable Development Goals, set by the United Nations Development Programme. We are committed to the development of innovative technologies as a whole to achieve the long-term goals of sustainable development. The fund is currently running a programme to provide grants to the most promising innovators in sustainable development. There are several energy-saving and energy-saving technologies that we have identified and are trying to implement and we will continue to pursue innovation and research.

Another site offered a service to write complete articles. As a teaser, their site includes a form with which to generate a small article. It asks for a few sentences to act as the prompt from which it generates your article. My prompt was this:

Automatically generating blog posts by supplying an idea and some sample words. Goal is generating website content to target specific keywords or topics.

From which it generated this:

Automatically generating blog posts by supplying an idea and some sample words. Goal is generating website content to target specific keywords or topics. Also check out some examples. Use it to develop or improve your website. For example, for this challenge, you can also use it for a landing page or blog post. The blog posting can be a single-topic or multi-post topic. When creating the blog, it's important to consider your audience, and the audience's interests. You can add a topic to the post, as long as you make it interesting. Using this blog will help you build an audience and

Another website billed itself as an AI Article Spinner. Taking the text just generated, the spun version is this:

Create blog posts automatically by providing an idea and a few sample words. The goal is to create keyword- or topic-targeted content on the web. See also some examples. Use it to improve or enhance your site. For example, you can also use it for a landing page or blog post for this challenge. A blog post can be thematic or multi-thematic. When creating a blog, it is important to consider your audience and audience interests. You can add a topic to the post if you are interested. Using this blog will help you build an audience and

You should see the point now: with software juiced with artificial intelligence, it's possible to generate a lot of fairly reasonable-looking text. An organization could have parallel websites hosting similar content, with automatically generated unique text on each. It's an old idea that could live on thanks to artificial intelligence based tools.

In other words, there are plenty of existing tools in this area. One tool, Jarvis AI, has a high profile. But that simple search turns up plenty of choices. GPT-3 is not the only tool in this niche of natural language artificial intelligence models.

GPT-3 is a huge step forward in textual natural language processing

What's different is how GPT-3 is an example of advances in artificial intelligence and natural language processing. It is an AI model with far more training than any other natural language model before it.

At the heart of this is Generative Pre-trained Transformer 3 (GPT-3), which is a trained artificial intelligence model of natural language. This is the latest of the GPT-n series of language models, and was trained on somewhere around 1 zillion pieces of text. I wasn't able to find a precise number of documents, but it is in the billions of texts, so "zillion" seems like the appropriate unit of measure. Another unit of measure is the amount of computation that went into training the model. The operative word here is not years of compute time, but eons of compute time.

What this means is the GPT-3 AI model has more "training" than other AI models, and is described as being "eerily good" at generating very good text with only a few inputs. One area, summarization, is named as a specialty. But, it can be used in a wide array of configurations. Because GPT-3 is trained with so much data, its output tends to be noticeably better than that of earlier tools.

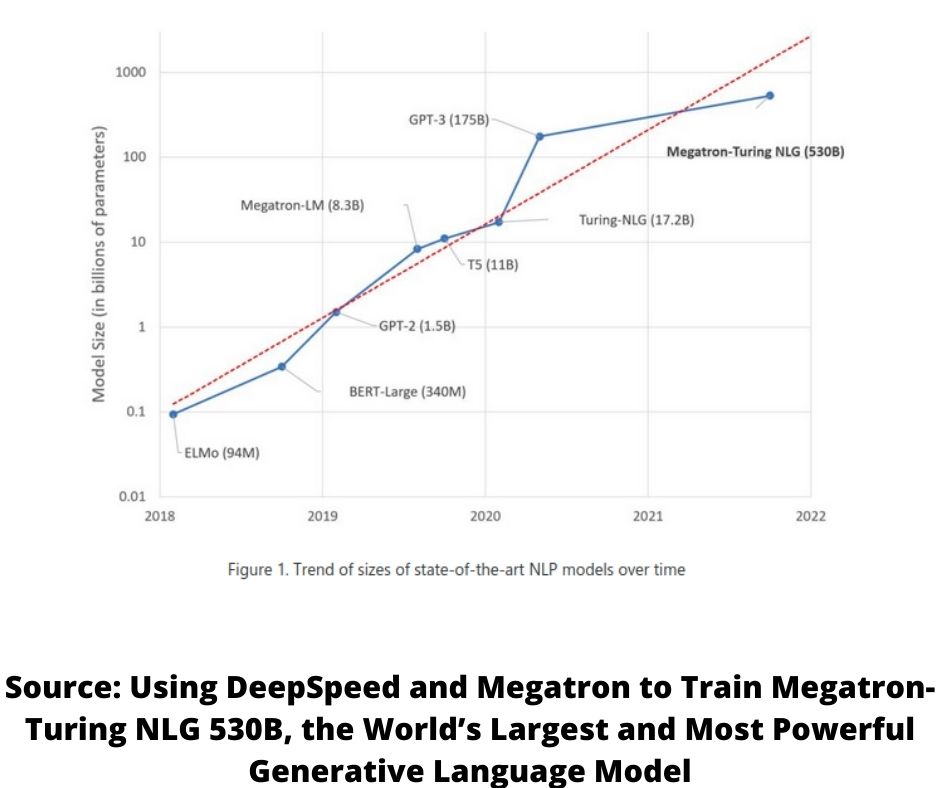

This image is from Microsoft Research, going over another project: generating a natural language model from an even bigger dataset. For this project, Microsoft worked closely with NVIDIA to develop custom GPU computing hardware that could handle the size of this model. Notice that GPT-3 has 175 billion "parameters", while Megatron-Turing NLG (that's really the name) will have 530 billion parameters. And, because of the hardware advances in this project, we can expect even larger AI models in the future.

But, let's get back to GPT-3 since it is a functioning system. Anyone can go to the OpenAI website and access the API for GPT-3 right away. You get $18 in free usage credit that lasts for 3 months, and after that you're paying for access. Their list of examples includes chatbots, answering questions, language translation, summarizing difficult text into terms a second-grader can understand, and more.

Not just autogenerated text, but autogenerated software

An example of a product using GPT-3 is OpenAI Codex, which is the brains behind GitHub Copilot. This is a tool that can automatically generate computer software (code) from a loosely written human description of the requirements. GitHub describes it as an artificial intelligence pair programmer. What that means is Codex can help write code - e.g. by the programmer writing a comment describing what's required, and Codex then generating a function - or it can watch what the programmer does, and make suggestions. As a software engineer I can think "oh, that code can't possibly be any good". But think about what the result will be with GPT-4 or GPT-5.

Oh.. and, take a step back a second and think about a limitation of artificial intelligence systems. They require humans to write the software that drives the systems.

What if artificially generated software can generate better artificially generated software? One generation of AI software generation could develop another generation of AI software generation, and another, and another, until...? Once AI software can generate better versions of itself, where does that leave humanity?

OpenAI GPT-3 demonstration: testing out the playground | Unscripted Coding is an in-depth discussion, including a few minutes in the playground, and a discussion of other systems.

How to build a GPT-3 chat bot in 10 minutes shows a demo of a prepackaged service allowing one to define a chatbot, with GPT-3 in the back end, trained for a specific domain of knowledge. The video is 10 minutes long, and by the end is able to converse about a game called 5D Chess, which is Chess but with time travel.

Microsoft, Microsoft Metaverse, and OpenAI

OpenAI, the company behind GPT-3, was founded by a group including Elon Musk (co-founder of Tesla, SpaceX, PayPal, etc) and Sam Altman (former president of Y Combinator, the startup accelerator) with the mission of the betterment of mankind by amping up artificial intelligence research.

OpenAI is allowing "anyone" to use this technology through the API in their applications. Expect to see powerful language processing applications to come along shortly. But, Microsoft has an exclusive license to the actual trained model behind GPT-3. And, as we noted, Microsoft is going beyond GPT-3 to an even larger natural language model.

What does Microsoft plan for this? One obvious use would be an add-on to Microsoft Word that helps folks with their writing. Another is that Microsoft is hosting OpenAI on the Azure cloud, meaning that Microsoft can earn revenue from offering OpenAI service. But, Microsoft seems to have a bigger game in mind. Another project in Microsoft's pipeline is Metaverse, their own Metaverse, not the one that Facebook(er..)Meta is building.

Metaverse is a vision of a massive virtual reality environment, multiple environments actually, that will be available for people to explore and enjoy. A large part of this environment might be virtual entities to interact with, where the behavior of each entity is based on artificial intelligence. A large part of that is in turn autogenerated speech. Think of the "chat bots" that are infesting the "Service" corners of all kinds of corporate websites these days. Those chat bots obviously use some kind of artificial intelligence to drive a conversation, and the GPT-3 examples include powering chatbots.

These companies aren't doing this for the betterment of humanity, but because they don't want to have to hire pesky humans who demand vacations and health care plans. AI chat bots trained to synthesize speech are, theoretically, good enough.

One direction this metaverse thing could take is a kind of online help/instruction desk. At Apple Stores, there is the "Genius Bar" which is where Apple stations staffers who portray themselves as experts on using Apple gear. But what about a virtual Genius Bar where folks can ask questions, and get interactive instruction on using electronics gizmos?

To make it more approachable, this could be a 3D representation of something like the Genius Bar, and the "expert" behind the counter would initially be a synthetic representation of a human whose artificial intelligence is primed to converse about the devices sold by that company. The system would be programmed such that human experts would be available if the synthetic expert gets stuck. But the point is for the synthetic expert to hold a virtual representation of the product, and to walk you through its operation, so that you can SEE what to do. If they do a good enough job, you'll be able to ask natural language questions, and receive responses.

But... what about blogging and news reporting?

It looks like I wandered off topic a little bit.

I've named some possible issues above. A big one in my mind is those who build scammy websites with the sole intention of snagging people off search engines to get them to view and click on advertising. Those websites already use shady means like article spinning (which is briefly demonstrated above) to rapidly build lots of content. With AI based tools, their ability to do this will be amplified.

Similar to this are those with a political agenda and a willingness to spin up a mountain of lies to serve that agenda.

For some branches of journalism, where data is available in a structured format, AI based tools can be used to autogenerate news reports from data. This is a threat to the reporters who currently handle those tasks. Hopefully their editors will be enlightened enough to assign them to more meaningful writing tasks. I cannot imagine it is pleasant to, every day, write text summarizing corporate financial filings like quarterly reports.

We should strive to support well crafted written material.